[Hive] Index part #0 for `ROLES` already set

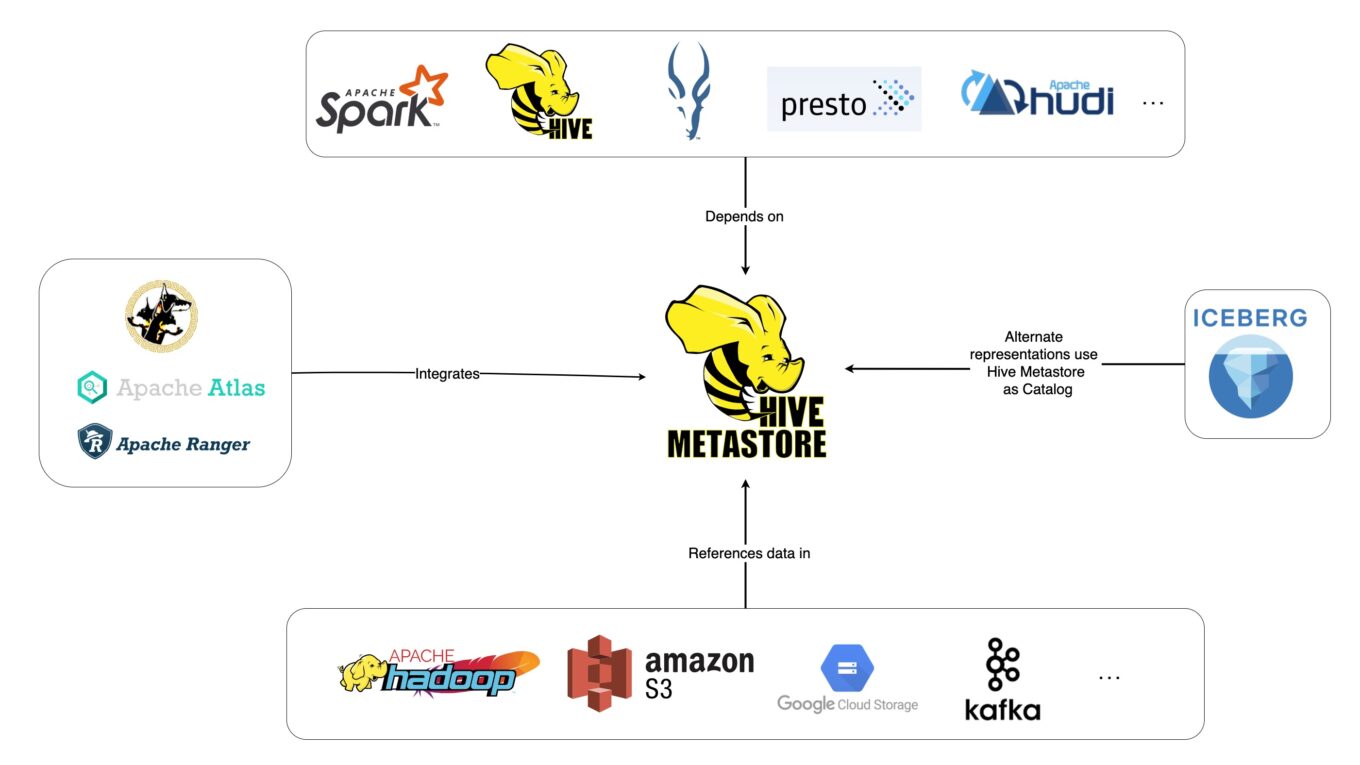

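In earlier posts, 檸檬爸 (Lemon Dad) introduced concepts such as Hive Metastore, Hive on Spark, and Spark Thrift Server. This post records a debugging session that took quite a lot of time. The environment was a Spark Thrift Server launched on a Spark Standalone cluster and connecting to a Hive Metastore backed by MySQL. After running successfully for a while, it would intermittently hit this bug (org.datanucleus.exceptions.NucleusException: Index part #0 for `ROLES` already set). The eventual fix was to recreate the MySQL user.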

Error Messages

Below is the error output captured on the Spark Thrift Server. The key points are:

- Query for candidates of org.apache.hadoop.hive.metastore.model.MRole and subclasses resulted in no possible candidates

- org.datanucleus.exceptions.NucleusException: Index part #0 for `ROLES` already set
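When the Thrift Server log is long, it can help to scan for these two signatures automatically. The following is only a minimal sketch: the function name `summarize` and the regular expressions are assumptions written against the log excerpt in this post, not part of Hive, Spark, or DataNucleus, and the patterns may need adjusting for other versions or log formats.

```python
import re

# Patterns modelled on the log lines quoted in this post (assumed format).
NO_CANDIDATES = re.compile(
    r"Query for candidates of (\S+) and subclasses resulted in no possible candidates"
)
INDEX_ALREADY_SET = re.compile(r"Index part #?(\d+) for `(\w+)` already set")
RETRY = re.compile(r"Retrying HMSHandler after (\d+) ms \(attempt (\d+) of (\d+)\)")


def summarize(log_text):
    """Collect the model classes, tables, and highest retry attempt found in a log."""
    classes = sorted(set(NO_CANDIDATES.findall(log_text)))
    tables = sorted({table for _part, table in INDEX_ALREADY_SET.findall(log_text)})
    attempts = [int(attempt) for _delay, attempt, _total in RETRY.findall(log_text)]
    return {
        "classes": classes,
        "tables": tables,
        "max_attempt": max(attempts, default=0),
    }
```

Passing the full text of the log to `summarize` returns a small dictionary, which makes it easier to see at a glance whether the failure involves `ROLES` only or other metastore tables as well.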

02:49:05.966 WARN Query - Query for candidates of org.apache.hadoop.hive.metastore.model.MRole and subclasses resulted in no possible candidates

org.datanucleus.exceptions.NucleusException: Index part #0 for `ROLES` already set

at org.datanucleus.store.rdbms.key.Index.setColumn(Index.java:99) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.table.TableImpl.getExistingIndices(TableImpl.java:1146) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.table.TableImpl.validateIndices(TableImpl.java:566) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.table.TableImpl.validateConstraints(TableImpl.java:387) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.table.ClassTable.validateConstraints(ClassTable.java:3576) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3471) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getStatementForCandidates(RDBMSQueryUtils.java:425) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileQueryFull(JDOQLQuery.java:865) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.JDOQLQuery.compileInternal(JDOQLQuery.java:347) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1816) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.query.Query.executeWithArray(Query.java:1744) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:368) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:228) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.ObjectStore.getMRole(ObjectStore.java:4429) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.ObjectStore.addRole(ObjectStore.java:4084) ~[hive-metastore-2.3.9.jar:2.3.9]

at sun.reflect.GeneratedMethodAccessor5.invoke(Unknown Source) ~[?:?]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_362]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_362]

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:101) ~[hive-metastore-2.3.9.jar:2.3.9]

at com.sun.proxy.$Proxy41.addRole(Unknown Source) ~[?:?]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles_core(HiveMetaStore.java:691) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles(HiveMetaStore.java:683) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:432) ~[hive-metastore-2.3.9.jar:2.3.9]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_362]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_362]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_362]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_362]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:148) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:107) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:79) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:92) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6902) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:162) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70) ~[hive-exec-2.3.9-core.jar:2.3.9]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[?:1.8.0_362]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[?:1.8.0_362]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[?:1.8.0_362]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[?:1.8.0_362]

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1740) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:83) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:133) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104) ~[hive-metastore-2.3.9.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3607) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3659) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3639) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3901) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:248) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:231) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:395) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:339) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:319) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:288) ~[hive-exec-2.3.9-core.jar:2.3.9]

at org.apache.hive.service.cli.session.HiveSessionImplwithUGI.<init>(HiveSessionImplwithUGI.java:57) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:263) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.spark.sql.hive.thriftserver.SparkSQLSessionManager.openSession(SparkSQLSessionManager.scala:56) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:203) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:370) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:242) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1497) ~[hive-service-rpc-3.1.2.jar:3.1.2]

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1482) ~[hive-service-rpc-3.1.2.jar:3.1.2]

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:38) ~[libthrift-0.12.0.jar:0.12.0]

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39) ~[libthrift-0.12.0.jar:0.12.0]

at org.apache.thrift.server.TServlet.doPost(TServlet.java:83) ~[libthrift-0.12.0.jar:0.12.0]

at org.apache.hive.service.cli.thrift.ThriftHttpServlet.doPost(ThriftHttpServlet.java:180) ~[spark-hive-thriftserver_2.12-3.3.0.jar:3.3.0]

at javax.servlet.http.HttpServlet.service(HttpServlet.java:523) ~[jakarta.servlet-api-4.0.3.jar:4.0.3]

at javax.servlet.http.HttpServlet.service(HttpServlet.java:590) ~[jakarta.servlet-api-4.0.3.jar:4.0.3]

at org.sparkproject.jetty.servlet.ServletHolder.handle(ServletHolder.java:799) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:550) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1624) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1440) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:188) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.servlet.ServletHandler.doScope(ServletHandler.java:501) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1594) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:186) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1355) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.Server.handle(Server.java:516) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:487) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.HttpChannel.dispatch(HttpChannel.java:732) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.HttpChannel.handle(HttpChannel.java:479) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.server.HttpConnection.onFillable(HttpConnection.java:277) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.ssl.SslConnection$DecryptedEndPoint.onFillable(SslConnection.java:555) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.ssl.SslConnection.onFillable(SslConnection.java:410) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.ssl.SslConnection$2.succeeded(SslConnection.java:164) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:338) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:315) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at org.sparkproject.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409) ~[spark-core_2.12-3.3.0.jar:3.3.0]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_362]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_362]

at java.lang.Thread.run(Thread.java:750) ~[?:1.8.0_362]

02:49:05.987 ERROR RetryingHMSHandler - Retrying HMSHandler after 2000 ms (attempt 4 of 10) with error: javax.jdo.JDOFatalInternalException: Index part #0 for `ROLES` already set

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:673)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:729)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:749)

at org.apache.hadoop.hive.metastore.ObjectStore.addRole(ObjectStore.java:4090)

at sun.reflect.GeneratedMethodAccessor5.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:101)

at com.sun.proxy.$Proxy41.addRole(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles_core(HiveMetaStore.java:691)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles(HiveMetaStore.java:683)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:432)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:148)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:107)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:79)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:92)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6902)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:162)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1740)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:83)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:133)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3607)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3659)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3639)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3901)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:248)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:231)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:395)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:339)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:319)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:288)

at org.apache.hive.service.cli.session.HiveSessionImplwithUGI.<init>(HiveSessionImplwithUGI.java:57)

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLSessionManager.openSession(SparkSQLSessionManager.scala:56)

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:203)

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:370)

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:242)

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1497)

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1482)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:38)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.thrift.server.TServlet.doPost(TServlet.java:83)

at org.apache.hive.service.cli.thrift.ThriftHttpServlet.doPost(ThriftHttpServlet.java:180)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:523)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:590)

at org.sparkproject.jetty.servlet.ServletHolder.handle(ServletHolder.java:799)

at org.sparkproject.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:550)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)

at org.sparkproject.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1624)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)

at org.sparkproject.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1440)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:188)

at org.sparkproject.jetty.servlet.ServletHandler.doScope(ServletHandler.java:501)

at org.sparkproject.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1594)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:186)

at org.sparkproject.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1355)

at org.sparkproject.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)

at org.sparkproject.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)

at org.sparkproject.jetty.server.Server.handle(Server.java:516)

at org.sparkproject.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:487)

at org.sparkproject.jetty.server.HttpChannel.dispatch(HttpChannel.java:732)

at org.sparkproject.jetty.server.HttpChannel.handle(HttpChannel.java:479)

at org.sparkproject.jetty.server.HttpConnection.onFillable(HttpConnection.java:277)

at org.sparkproject.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105)

at org.sparkproject.jetty.io.ssl.SslConnection$DecryptedEndPoint.onFillable(SslConnection.java:555)

at org.sparkproject.jetty.io.ssl.SslConnection.onFillable(SslConnection.java:410)

at org.sparkproject.jetty.io.ssl.SslConnection$2.succeeded(SslConnection.java:164)

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105)

at org.sparkproject.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:338)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:315)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)

at org.sparkproject.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

NestedThrowablesStackTrace:

Index part #0 for `ROLES` already set

org.datanucleus.exceptions.NucleusException: Index part #0 for `ROLES` already set

at org.datanucleus.store.rdbms.key.Index.setColumn(Index.java:99)

at org.datanucleus.store.rdbms.table.TableImpl.getExistingIndices(TableImpl.java:1146)

at org.datanucleus.store.rdbms.table.TableImpl.validateIndices(TableImpl.java:566)

at org.datanucleus.store.rdbms.table.TableImpl.validateConstraints(TableImpl.java:387)

at org.datanucleus.store.rdbms.table.ClassTable.validateConstraints(ClassTable.java:3576)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3471)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2896)

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:119)

at org.datanucleus.store.rdbms.RDBMSStoreManager.manageClasses(RDBMSStoreManager.java:1627)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:672)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getPropertiesForGenerator(RDBMSStoreManager.java:2088)

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:1271)

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:3760)

at org.datanucleus.state.StateManagerImpl.setIdentity(StateManagerImpl.java:2267)

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:484)

at org.datanucleus.state.StateManagerImpl.initialiseForPersistentNew(StateManagerImpl.java:120)

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:218)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:2079)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:1923)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:1778)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:217)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:724)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:749)

at org.apache.hadoop.hive.metastore.ObjectStore.addRole(ObjectStore.java:4090)

at sun.reflect.GeneratedMethodAccessor5.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:101)

at com.sun.proxy.$Proxy41.addRole(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles_core(HiveMetaStore.java:691)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles(HiveMetaStore.java:683)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:432)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:148)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:107)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:79)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:92)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6902)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:162)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1740)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:83)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:133)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3607)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3659)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3639)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3901)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:248)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:231)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:395)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:339)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:319)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:288)

at org.apache.hive.service.cli.session.HiveSessionImplwithUGI.<init>(HiveSessionImplwithUGI.java:57)

at org.apache.hive.service.cli.session.SessionManager.openSession(SessionManager.java:263)

at org.apache.spark.sql.hive.thriftserver.SparkSQLSessionManager.openSession(SparkSQLSessionManager.scala:56)

at org.apache.hive.service.cli.CLIService.openSessionWithImpersonation(CLIService.java:203)

at org.apache.hive.service.cli.thrift.ThriftCLIService.getSessionHandle(ThriftCLIService.java:370)

at org.apache.hive.service.cli.thrift.ThriftCLIService.OpenSession(ThriftCLIService.java:242)

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1497)

at org.apache.hive.service.rpc.thrift.TCLIService$Processor$OpenSession.getResult(TCLIService.java:1482)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:38)

at org.apache.thrift.TBaseProcessor.process(TBaseProcessor.java:39)

at org.apache.thrift.server.TServlet.doPost(TServlet.java:83)

at org.apache.hive.service.cli.thrift.ThriftHttpServlet.doPost(ThriftHttpServlet.java:180)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:523)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:590)

at org.sparkproject.jetty.servlet.ServletHolder.handle(ServletHolder.java:799)

at org.sparkproject.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:550)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)

at org.sparkproject.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1624)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:233)

at org.sparkproject.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1440)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:188)

at org.sparkproject.jetty.servlet.ServletHandler.doScope(ServletHandler.java:501)

at org.sparkproject.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1594)

at org.sparkproject.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:186)

at org.sparkproject.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1355)

at org.sparkproject.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:141)

at org.sparkproject.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:127)

at org.sparkproject.jetty.server.Server.handle(Server.java:516)

at org.sparkproject.jetty.server.HttpChannel.lambda$handle$1(HttpChannel.java:487)

at org.sparkproject.jetty.server.HttpChannel.dispatch(HttpChannel.java:732)

at org.sparkproject.jetty.server.HttpChannel.handle(HttpChannel.java:479)

at org.sparkproject.jetty.server.HttpConnection.onFillable(HttpConnection.java:277)

at org.sparkproject.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:311)

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105)

at org.sparkproject.jetty.io.ssl.SslConnection$DecryptedEndPoint.onFillable(SslConnection.java:555)

at org.sparkproject.jetty.io.ssl.SslConnection.onFillable(SslConnection.java:410)

at org.sparkproject.jetty.io.ssl.SslConnection$2.succeeded(SslConnection.java:164)

at org.sparkproject.jetty.io.FillInterest.fillable(FillInterest.java:105)

at org.sparkproject.jetty.io.ChannelEndPoint$1.run(ChannelEndPoint.java:104)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:338)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:315)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:173)

at org.sparkproject.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:131)

at org.sparkproject.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:409)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

Other Similar Hive Errors

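I searched for similar Hive errors, but none of the results helped directly.

Solution

I later noticed that only specific MySQL users triggered the behavior above. After recreating the MySQL user with the following commands, the problem was resolved: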

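-- List existing accounts to identify the affected user and host.
SELECT user, host FROM mysql.user;

-- Drop the problematic account.
DROP USER 'yourUser'@'%';

-- Recreate the account with the same name and password.
CREATE USER 'yourUser'@'%' IDENTIFIED BY 'yourPasswd';

-- Grant privileges on the Hive Metastore database only.
GRANT ALL PRIVILEGES ON yourDB.* TO 'yourUser'@'%';

-- Confirm the resulting grants.
SHOW GRANTS FOR 'yourUser'@'%';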