[HDFS] Installing HDFS (Hadoop-3.1.2)

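There are plenty of online guides to installing HDFS. For Hadoop-2.8.2, I (萌爸) think the Ironman contest series on 痞客邦 (PIXNET), "Hadoop ecosystem 工具簡介, 安裝教學與各種情境使用", explains the process in great detail. This post instead shows the problems that come up when installing the newer Hadoop-3.1.2. It mainly follows the linked hadoop-3.1.2 Single Node Cluster setup guide (Link).

Environment and versions: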

- Java version “12.0.1”

- Hadoop-3.1.2

core-site.xml (…/etc/hadoop/core-site.xml)

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

hdfs-site.xml (…/etc/hadoop/hdfs-site.xml)

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/hadoop-3.1.2/data/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/opt/hadoop-3.1.2/data/datanode</value>

</property>

</configuration>

Passwordless SSH setup

sudo ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

sudo cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

sudo chmod 0600 ~/.ssh/authorized_keys

Format the NameNode

hdfs namenode -format

Running the format command above produces:

STARTUP_MSG: build = https://github.com/apache/hadoop.git -r 1019dde65bcf12e05ef48ac71e84550d589e5d9a; compiled by 'sunilg' on 2019-01-29T01:39Z

STARTUP_MSG: java = 12.0.1

************************************************************/

2019-07-28 21:31:55,002 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2019-07-28 21:31:55,159 INFO namenode.NameNode: createNameNode [-format]

2019-07-28 21:31:55,359 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2019-07-28 21:31:55,829 INFO common.Util: Assuming 'file' scheme for path /opt/hadoop-3.1.2/data/namenode in configuration.

2019-07-28 21:31:55,830 INFO common.Util: Assuming 'file' scheme for path /opt/hadoop-3.1.2/data/namenode in configuration.

Formatting using clusterid: CID-c830415e-428c-4edf-9340-cc672800357e

2019-07-28 21:31:55,880 INFO namenode.FSEditLog: Edit logging is async:true

2019-07-28 21:31:55,909 INFO namenode.FSNamesystem: KeyProvider: null

2019-07-28 21:31:55,910 INFO namenode.FSNamesystem: fsLock is fair: true

2019-07-28 21:31:55,911 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2019-07-28 21:31:55,984 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2019-07-28 21:31:55,984 INFO namenode.FSNamesystem: supergroup = supergroup

2019-07-28 21:31:55,984 INFO namenode.FSNamesystem: isPermissionEnabled = true

2019-07-28 21:31:55,984 INFO namenode.FSNamesystem: HA Enabled: false

2019-07-28 21:31:56,050 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2019-07-28 21:31:56,075 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2019-07-28 21:31:56,075 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2019-07-28 21:31:56,083 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2019-07-28 21:31:56,083 INFO blockmanagement.BlockManager: The block deletion will start around 2019 7月 28 21:31:56

2019-07-28 21:31:56,087 INFO util.GSet: Computing capacity for map BlocksMap

2019-07-28 21:31:56,087 INFO util.GSet: VM type = 64-bit

2019-07-28 21:31:56,090 INFO util.GSet: 2.0% max memory 2 GB = 41.0 MB

2019-07-28 21:31:56,090 INFO util.GSet: capacity = 2^22 = 4194304 entries

2019-07-28 21:31:56,126 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2019-07-28 21:31:56,136 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2019-07-28 21:31:56,136 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManager: defaultReplication = 1

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManager: maxReplication = 512

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManager: minReplication = 1

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2019-07-28 21:31:56,137 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2019-07-28 21:31:56,138 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2019-07-28 21:31:56,138 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2019-07-28 21:31:56,178 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

2019-07-28 21:31:56,203 INFO util.GSet: Computing capacity for map INodeMap

2019-07-28 21:31:56,204 INFO util.GSet: VM type = 64-bit

2019-07-28 21:31:56,204 INFO util.GSet: 1.0% max memory 2 GB = 20.5 MB

2019-07-28 21:31:56,204 INFO util.GSet: capacity = 2^21 = 2097152 entries

2019-07-28 21:31:56,212 INFO namenode.FSDirectory: ACLs enabled? false

2019-07-28 21:31:56,212 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2019-07-28 21:31:56,212 INFO namenode.FSDirectory: XAttrs enabled? true

2019-07-28 21:31:56,213 INFO namenode.NameNode: Caching file names occurring more than 10 times

2019-07-28 21:31:56,225 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2019-07-28 21:31:56,230 INFO snapshot.SnapshotManager: SkipList is disabled

2019-07-28 21:31:56,236 INFO util.GSet: Computing capacity for map cachedBlocks

2019-07-28 21:31:56,236 INFO util.GSet: VM type = 64-bit

2019-07-28 21:31:56,237 INFO util.GSet: 0.25% max memory 2 GB = 5.1 MB

2019-07-28 21:31:56,237 INFO util.GSet: capacity = 2^19 = 524288 entries

2019-07-28 21:31:56,259 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2019-07-28 21:31:56,259 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2019-07-28 21:31:56,259 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2019-07-28 21:31:56,264 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2019-07-28 21:31:56,264 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2019-07-28 21:31:56,268 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2019-07-28 21:31:56,268 INFO util.GSet: VM type = 64-bit

2019-07-28 21:31:56,268 INFO util.GSet: 0.029999999329447746% max memory 2 GB = 629.1 KB

2019-07-28 21:31:56,268 INFO util.GSet: capacity = 2^16 = 65536 entries

2019-07-28 21:31:56,312 INFO namenode.FSImage: Allocated new BlockPoolId: BP-952615036-192.168.1.35-1564342316302

2019-07-28 21:31:56,356 INFO common.Storage: Storage directory /opt/hadoop-3.1.2/data/namenode has been successfully formatted.

2019-07-28 21:31:56,383 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/hadoop-3.1.2/data/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2019-07-28 21:31:56,512 INFO namenode.FSImageFormatProtobuf: Image file /opt/hadoop-3.1.2/data/namenode/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2019-07-28 21:31:56,544 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2019-07-28 21:31:56,553 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at LindeMBP/192.168.1.35

************************************************************/

According to the original tutorial, running ../sbin/start-dfs.sh at this point should be enough to start the HDFS NameNode and DataNode. Instead, the following errors appear:

Starting namenodes on [localhost]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [LindeMBP]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

2019-07-28 21:43:09,844 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

These errors did not appear with Hadoop-2.8.2 and earlier. The fix is to add the following lines to /opt/hadoop-3.1.2/sbin/start-dfs.sh:

HDFS_DATANODE_USER=your_username

HADOOP_DATANODE_SECURE_USER=your_username

HDFS_NAMENODE_USER=your_username

HDFS_SECONDARYNAMENODE_USER=your_username

Running ./start-dfs.sh again then gives the following output:

lindembp:sbin linyuting$ ./start-dfs.sh

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [lindembp]

lindembp: ssh: Could not resolve hostname lindembp: nodename nor servname provided, or not known

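2019-07-31 22:23:13,273 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

The SecondaryNameNode did not start because ssh could not resolve the hostname lindembp, but jps shows that the NameNode and DataNode are now running: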

4883 NameNode

1158 OracleIdeLauncher

4968 DataNode

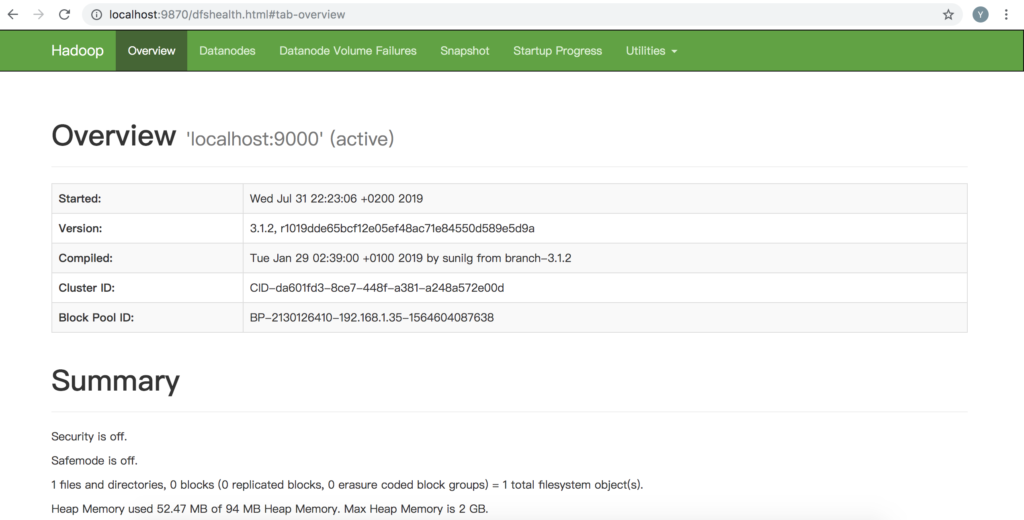

5049 Jps利用瀏覽器查看 localhost:9870,跟 Hadoop 2 要查看的 port (50070) 不太一樣,這邊要注意一下!
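The port change can be captured in a small shell sketch. The function name namenode_ui_url is hypothetical and only encodes the two default ports mentioned above; a cluster that overrides the NameNode HTTP address in its configuration will use a different value.

```shell
# Hypothetical helper: print the default NameNode web UI URL
# for a given Hadoop major version (2 or 3), assuming localhost.
namenode_ui_url() {
  case "$1" in
    2) echo "http://localhost:50070" ;;
    3) echo "http://localhost:9870" ;;
    *) echo "unknown Hadoop major version: $1" >&2
       return 1 ;;
  esac
}
```

With the NameNode running, something like curl -sI "$(namenode_ui_url 3)" should return HTTP response headers if the web UI is reachable.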

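- Note that the page shows Security is off. More properties have to be set in the -site.xml files to enable the security features.

- Remember to run hdfs dfs -mkdir /home to create a subdirectory, then hdfs dfs -ls / to check the result :)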
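The directory check above can be wrapped in a short shell sketch. The function name make_and_list and the HDFS_BIN variable are hypothetical, introduced here only so the command can be swapped out when trying the script without a cluster. The -p flag is an addition to the command in the text, so that a second run does not fail when the directory already exists.

```shell
# HDFS_BIN is a hypothetical override; it defaults to the hdfs command on PATH.
HDFS_BIN="${HDFS_BIN:-hdfs}"

# Create a directory in HDFS, then list the root to confirm it is there.
make_and_list() {
  target_dir="$1"
  "$HDFS_BIN" dfs -mkdir -p "$target_dir" && "$HDFS_BIN" dfs -ls /
}
```

Calling make_and_list /home after start-dfs.sh has brought up the NameNode and DataNode should list /home among the entries under the root.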

Running start-dfs.sh as root instead leads to the following errors. I do not yet know how to fix this!

bash-5.0: whoami

root

bash-5.0:./start-dfs.sh

Starting namenodes on [localhost]

/opt/hadoop-3.1.2/bin/../libexec/hadoop-functions.sh: line 398: syntax error near unexpected token `<'

/opt/hadoop-3.1.2/bin/../libexec/hadoop-functions.sh: line 398: ` done < <(for text in "${input[@]}"; do'

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 70: hadoop_deprecate_envvar: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 87: hadoop_bootstrap: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 104: hadoop_parse_args: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 105: shift: : numeric argument required

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 244: hadoop_need_reexec: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 252: hadoop_verify_user_perm: command not found

/opt/hadoop-3.1.2/bin/hdfs: line 213: hadoop_validate_classname: command not found

/opt/hadoop-3.1.2/bin/hdfs: line 214: hadoop_exit_with_usage: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 263: hadoop_add_client_opts: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 270: hadoop_subcommand_opts: command not found

/opt/hadoop-3.1.2/bin/../libexec/hadoop-config.sh: line 273: hadoop_generic_java_subcmd_handler: command not found