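[BigData] Integrating BeeGFS into the K8S Ecosystem

Although public cloud services such as AWS, Azure, and GCP have become mainstream, private-cloud HPC still holds a steady share of the market. Unlike the managed storage services of the public cloud, storage remains a topic that demands considerable effort when building a private cloud. This post records how to bring BeeGFS, one of the storage solutions used in HPC, into the K8S ecosystem.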

Limitation of NFS

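NFS is a relatively common file-sharing solution. However, according to a report from Azure, because each file can only be served by a single server, NFS tends to hit that server's VM disk limits: 80,000 IOPS and 2 Gbps of throughput. Attempting to read uncached data from a single NFS server faster than this will be throttled.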

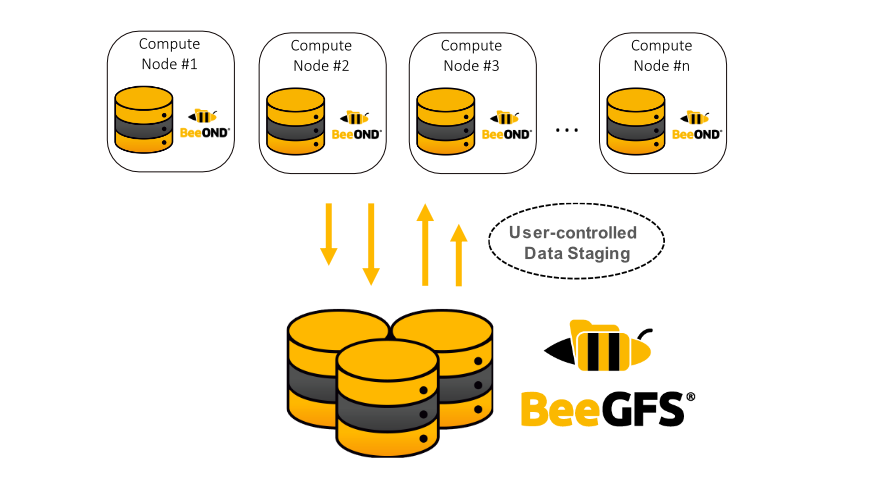
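To make the limit concrete, the sketch below converts the quoted 2 Gbps into MB/s, estimates how long a single server would need to stream a dataset once, and computes the I/O size above which bandwidth, rather than the 80,000 IOPS limit, becomes the binding constraint. It assumes decimal units and ignores protocol overhead and caching; the dataset size used in the example is a hypothetical input.

```shell
#!/usr/bin/env bash
# Back-of-the-envelope numbers for a single bandwidth- and IOPS-capped server.
# Assumes decimal units (1 Gbps = 10^9 bit/s, 1 GB = 1000 MB) and ignores
# protocol overhead and caching. Integer arithmetic only.

# gbps_to_mbytes_per_s GBPS -> prints MB/s
gbps_to_mbytes_per_s() {
  local gbps=$1
  echo $(( gbps * 1000 / 8 ))
}

# transfer_seconds DATASET_GB GBPS -> prints seconds needed to read the dataset once
transfer_seconds() {
  local dataset_gb=$1 gbps=$2
  local mbps
  mbps=$(gbps_to_mbytes_per_s "$gbps")
  echo $(( dataset_gb * 1000 / mbps ))
}

# crossover_io_bytes GBPS IOPS -> I/O size (bytes) at which both limits are hit together;
# larger I/Os saturate bandwidth first, smaller ones saturate IOPS first.
crossover_io_bytes() {
  local gbps=$1 iops=$2
  echo $(( gbps * 1000000000 / 8 / iops ))
}
```

For example, `gbps_to_mbytes_per_s 2` gives 250 MB/s, `transfer_seconds 1000 2` gives 4000 s (about 67 minutes for 1 TB), and `crossover_io_bytes 2 80000` gives 3125 bytes, so a workload issuing I/Os larger than roughly 3 KB saturates the 2 Gbps link before it reaches 80,000 IOPS.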

Parallel File System

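For HPC storage, the common solutions therefore include the following:

BeeGFS Deployment

Following the BeeGFS official website, we use the QuickStart installation method (a container-based deployment is also available). On AWS we launch a RedHat 9 based EC2 instance (t2.medium) to act as the BeeGFS server and install the following on it: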

ssh -i "beegfs.pem" ec2-user@ec2-54-242-88-124.compute-1.amazonaws.com

yum install -y wget
wget -O /etc/yum.repos.d/beegfs_rhel8.repo https://www.beegfs.io/release/beegfs_7.4.5/dists/beegfs-rhel8.repo
yum install -y beegfs-mgmtd
yum install -y beegfs-meta
yum install -y beegfs-storage

/opt/beegfs/sbin/beegfs-setup-mgmtd -p /data/beegfs/beegfs_mgmtd
/opt/beegfs/sbin/beegfs-setup-meta -p /data/beegfs/beegfs_meta -s 2 -m 172.31.30.229
/opt/beegfs/sbin/beegfs-setup-storage -p /mnt/myraid1/beegfs_storage -s 3 -i 301 -m 172.31.30.229
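The setup scripts above only write configuration; the daemons still have to be started, which the next steps do one service at a time with `systemctl start` and `systemctl status`. A minimal sketch that does the same in one pass, assuming systemd and the unit names shown in the status output. Note that `systemctl enable --now` also enables the units at boot, which goes slightly beyond the original steps, and `SYSTEMCTL` is a hypothetical override hook added only for dry runs.

```shell
#!/usr/bin/env bash
# Start (and enable at boot) the three BeeGFS server daemons, then report whether each is active.
# SYSTEMCTL can be overridden (e.g. SYSTEMCTL=echo) for a dry run; it defaults to systemctl.
SYSTEMCTL=${SYSTEMCTL:-systemctl}

start_beegfs_services() {
  local svc failed=0
  # mgmtd first, matching the order used in this post.
  for svc in beegfs-mgmtd beegfs-meta beegfs-storage; do
    "$SYSTEMCTL" enable --now "$svc" || failed=1
    if "$SYSTEMCTL" is-active --quiet "$svc"; then
      echo "$svc: active"
    else
      echo "$svc: NOT active" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

Run `start_beegfs_services` as root on the server; a non-zero exit status means at least one unit failed, in which case `systemctl status <unit>` (as below) shows the details.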

systemctl start beegfs-mgmtd
systemctl status beegfs-mgmtd

● beegfs-mgmtd.service - BeeGFS Management Server
     Loaded: loaded (/usr/lib/systemd/system/beegfs-mgmtd.service; enabled; preset: disabled)
     Active: active (running) since Fri 2024-12-20 07:52:42 UTC; 4h 7min ago
       Docs: http://www.beegfs.com/content/documentation/
   Main PID: 15043 (beegfs-mgmtd/Ma)
      Tasks: 11 (limit: 22900)
     Memory: 19.0M
        CPU: 1.873s
     CGroup: /system.slice/beegfs-mgmtd.service
             └─15043 /opt/beegfs/sbin/beegfs-mgmtd cfgFile=/etc/beegfs/beegfs-mgmtd.conf runDaemonized=false

Dec 20 07:52:42 i systemd[1]: Started BeeGFS Management Server.

systemctl start beegfs-meta
systemctl status beegfs-meta

● beegfs-meta.service - BeeGFS Metadata Server
     Loaded: loaded (/usr/lib/systemd/system/beegfs-meta.service; enabled; preset: disabled)
     Active: active (running) since Fri 2024-12-20 07:54:14 UTC; 4h 6min ago
       Docs: http://www.beegfs.com/content/documentation/
   Main PID: 15065 (beegfs-meta/Mai)
      Tasks: 23 (limit: 22900)
     Memory: 108.1M
        CPU: 2.715s
     CGroup: /system.slice/beegfs-meta.service
             └─15065 /opt/beegfs/sbin/beegfs-meta cfgFile=/etc/beegfs/beegfs-meta.conf runDaemonized=false

Dec 20 07:54:14 i systemd[1]: Started BeeGFS Metadata Server.

systemctl start beegfs-storage
systemctl status beegfs-storage

● beegfs-storage.service - BeeGFS Storage Server
     Loaded: loaded (/usr/lib/systemd/system/beegfs-storage.service; enabled; preset: disabled)
     Active: active (running) since Fri 2024-12-20 07:54:50 UTC; 4h 6min ago
       Docs: http://www.beegfs.com/content/documentation/
   Main PID: 15094 (beegfs-storage/)
      Tasks: 21 (limit: 22900)
     Memory: 51.7M
        CPU: 1.177s
     CGroup: /system.slice/beegfs-storage.service
             └─15094 /opt/beegfs/sbin/beegfs-storage cfgFile=/etc/beegfs/beegfs-storage.conf runDaemonized=false

Dec 20 07:54:50 i systemd[1]: Started BeeGFS Storage Server.

Note: the authentication secret must be configured in /etc/beegfs/beegfs-mgmtd.conf, for example connAuthFile = /home/password.txt. connAuthFile must also be set in /etc/beegfs/beegfs-meta.conf and /etc/beegfs/beegfs-storage.conf.
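The connAuthFile step in the note can be scripted. A sketch, assuming GNU sed and the `connAuthFile = <path>` syntax from the note; the secret path is the caller's choice (the note uses /home/password.txt, and /etc/beegfs/connauthfile in the usage line is a hypothetical location). As far as I understand BeeGFS authentication, every node that talks to the file system, clients included, needs an identical copy of the secret file.

```shell
#!/usr/bin/env bash
# Create a shared connAuthFile secret (if missing) and point BeeGFS config files at it.
# Assumes GNU sed and the "connAuthFile = <path>" key shown in the note above.

# enable_conn_auth SECRET_FILE CONF_FILE...
enable_conn_auth() {
  local secret=$1; shift
  local conf
  if [ ! -s "$secret" ]; then
    # 128 random bytes; every BeeGFS node is expected to use an identical copy of this file.
    dd if=/dev/urandom of="$secret" bs=128 count=1 2>/dev/null || return 1
    chmod 400 "$secret"
  fi
  for conf in "$@"; do
    [ -f "$conf" ] || { echo "missing config: $conf" >&2; return 1; }
    if grep -qE '^[[:space:]]*connAuthFile[[:space:]]*=' "$conf"; then
      # Overwrite the existing (possibly empty) connAuthFile line.
      sed -i "s|^[[:space:]]*connAuthFile[[:space:]]*=.*|connAuthFile = $secret|" "$conf"
    else
      # No connAuthFile key yet: append one.
      echo "connAuthFile = $secret" >> "$conf"
    fi
  done
}
```

Usage on the server: `enable_conn_auth /etc/beegfs/connauthfile /etc/beegfs/beegfs-mgmtd.conf /etc/beegfs/beegfs-meta.conf /etc/beegfs/beegfs-storage.conf`, then restart the three services so they reread their configuration.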

Verifying BeeGFS with the BeeGFS Client

A BeeGFS client can be used to confirm that the BeeGFS services are up:

yum install beegfs-client beegfs-helperd beegfs-utils
/opt/beegfs/sbin/beegfs-setup-client -m 172.31.20.2
systemctl start beegfs-helperd
systemctl start beegfs-client

With the commands above, the BeeGFS file system is mounted at a fixed location, for example /mnt/beegfs.
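To confirm the mount from a script rather than by eye, the helper below looks for a `beegfs` entry in the kernel mount table. It assumes the client reports its file-system type as `beegfs` and uses /mnt/beegfs, the example path above; the mounts file is a parameter so the check can also be tried against a saved copy.

```shell
#!/usr/bin/env bash
# Check whether a BeeGFS file system is mounted at a given path by reading the
# kernel mount table (whitespace-separated fields: device mountpoint fstype options ...).

# is_beegfs_mounted MOUNTPOINT [MOUNTS_FILE] -> exit 0 if mounted with fstype beegfs
is_beegfs_mounted() {
  local mountpoint=$1 mounts=${2:-/proc/mounts}
  awk -v mp="$mountpoint" '$2 == mp && $3 == "beegfs" { found = 1 } END { exit !found }' "$mounts"
}
```

On the client, `is_beegfs_mounted /mnt/beegfs && echo mounted` should succeed once beegfs-client is running. Going beyond the mount itself, the beegfs-utils package installed above provides `beegfs-ctl` and `beegfs-df`; for example `beegfs-ctl --listnodes --nodetype=storage` and `beegfs-df` list the registered storage nodes and their free space, which shows the servers are actually reachable.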

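Connecting Kubernetes with the BeeGFS CSI Driver

Once the BeeGFS services are running, the next step is to expose BeeGFS as a PV and PVC that can be mounted into Pods. The main reference is the BeeGFS CSI Driver GitHub project, and there are two main steps:

Deploy the BeeGFS CSI Driver first

As explained in https://github.com/ThinkParQ/beegfs-csi-driver/blob/master/docs/deployment.md#beegfs-client-parameters, this itself breaks down into several steps: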

- Kubernetes Node Preparation (RedHat)

yum install -y wget
wget -O /etc/yum.repos.d/beegfs_rhel8.repo https://www.beegfs.io/release/beegfs_7.4.5/dists/beegfs-rhel8.repo
rpm --import https://www.beegfs.io/release/beegfs_7.4.5/gpg/GPG-KEY-beegfs
yum update
yum install -y beegfs-client-dkms beegfs-helperd beegfs-utils
vim /etc/beegfs/beegfs-helperd.conf
systemctl start beegfs-helperd

- Kubernetes Node Preparation (Ubuntu 22.04)

apt-get install -y wget
wget -O /etc/apt/sources.list.d/beegfs-jammy.list https://www.beegfs.io/release/beegfs_7.4.5/dists/beegfs-jammy.list
wget -q https://www.beegfs.io/release/beegfs_7.4.5/gpg/GPG-KEY-beegfs -O- | apt-key add -
apt-get update
apt-get install -y beegfs-client-dkms beegfs-helperd beegfs-utils
vim /etc/beegfs/beegfs-helperd.conf
systemctl start beegfs-helperd

- Kubernetes Deployment

git clone https://github.com/ThinkParQ/beegfs-csi-driver.git
cd beegfs-csi-driver
cp -r deploy/k8s/overlays/default deploy/k8s/overlays/my-overlay
kubectl apply -k deploy/k8s/overlays/my-overlay
kubectl get pods -n beegfs-csi

NAME                      READY   STATUS    RESTARTS   AGE
csi-beegfs-controller-0   3/3     Running   0          3h32m
csi-beegfs-node-krfql     3/3     Running   0          3h3

- Note: when editing deploy/k8s/overlays/my-overlay/csi-beegfs-config.yaml, boolean values must be wrapped in quotes, for example:

# Copyright 2021 NetApp, Inc. All Rights Reserved.
# Licensed under the Apache License, Version 2.0.

# Use this file as instructed in the General Configuration section of /docs/deployment.md. See
# /deploy/k8s/examples/csi-beegfs-config.yaml for a worst-case example of what to put in this file. Kustomize will
# automatically transform this file into a correct ConfigMap readable by the deployed driver. If this file is left
# unmodified, the driver will deploy correctly with no custom configuration.

config:
  beegfsClientConf:
    connDisableAuthentication: "false"


Otherwise the controller and node Pods cannot be started (see https://github.com/ThinkParQ/beegfs-csi-driver/issues/37).
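Because unquoted booleans in csi-beegfs-config.yaml kept the controller and node Pods from starting, a quick pre-flight check before `kubectl apply -k` can save a failed rollout. This is a rough sketch, not a YAML parser: it only looks for the literal words true/false (any case) after a colon, skips comment lines, and would miss other YAML boolean spellings.

```shell
#!/usr/bin/env bash
# Flag unquoted true/false values in a kustomize overlay config such as
# deploy/k8s/overlays/my-overlay/csi-beegfs-config.yaml.

# check_quoted_booleans FILE -> prints offending lines with line numbers, returns 1 if any were found
check_quoted_booleans() {
  local file=$1
  # "key: false" or "key: true  # comment" matches; "key: \"false\"" and comment lines do not.
  if grep -nEi '^[^#]*:[[:space:]]+(true|false)[[:space:]]*(#.*)?$' "$file"; then
    echo "quote the boolean values above, e.g. \"false\"" >&2
    return 1
  fi
  return 0
}
```

Usage: `check_quoted_booleans deploy/k8s/overlays/my-overlay/csi-beegfs-config.yaml && kubectl apply -k deploy/k8s/overlays/my-overlay`.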

Create the PV, PVC, and Pod on top of the driver

kubectl apply -f examples/k8s/static/static-pv.yaml
kubectl get pv

NAME                   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                           STORAGECLASS   VOLUMEATTRIBUTESCLASS   REASON   AGE
csi-beegfs-static-pv   100Gi      RWX            Retain           Bound    default/csi-beegfs-static-pvc                  <unset>                          3h30m

kubectl apply -f examples/k8s/static/static-pvc.yaml
kubectl get pvc

NAME                    STATUS   VOLUME                 CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
csi-beegfs-static-pvc   Bound    csi-beegfs-static-pv   100Gi      RWX                           <unset>                 3h30m
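The two manifests applied above come from the driver repository's examples/k8s/static directory. To point a PV at a different BeeGFS directory, a hypothetical helper that renders an equivalent PV/PVC pair is sketched below. It assumes the CSI driver name `beegfs.csi.netapp.com` and a `volumeHandle` of the form `beegfs://<sysMgmtdHost>/<path>`; both should be checked against examples/k8s/static/static-pv.yaml before use. The empty `storageClassName` keeps the claim away from any default StorageClass so that it binds to the PV named in `volumeName`.

```shell
#!/usr/bin/env bash
# Print a static BeeGFS PersistentVolume plus a PersistentVolumeClaim bound to it.
# Assumed (verify against the repo examples): driver name beegfs.csi.netapp.com and
# volumeHandle format beegfs://<sysMgmtdHost>/<path-inside-beegfs>.

# render_static_pv NAME MGMTD_HOST BEEGFS_PATH SIZE
render_static_pv() {
  local name=$1 host=$2 path=${3#/} size=$4   # strip one leading slash from the path
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}-pv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: ${size}
  persistentVolumeReclaimPolicy: Retain
  csi:
    driver: beegfs.csi.netapp.com
    volumeHandle: beegfs://${host}/${path}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
  storageClassName: ""
  volumeName: ${name}-pv
EOF
}
```

Usage: `render_static_pv my-beegfs 172.31.30.229 /k8s/static 100Gi | kubectl apply -f -`, where `my-beegfs` and `/k8s/static` are illustrative; the directory inside BeeGFS presumably has to exist already for a static volume.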

kubectl get pods

NAME                    READY   STATUS    RESTARTS   AGE
csi-beegfs-static-app   1/1     Running   0          3h30m

kubectl describe pod csi-beegfs-static-app

Name:             csi-beegfs-static-app
Namespace:        default
Priority:         0
Service Account:  default
Node:             ip-172-31-34-220.ec2.internal/172.31.34.220
Start Time:       Fri, 20 Dec 2024 16:57:28 +0800
Labels:           <none>
Annotations:      <none>
Status:           Running
IP:               172.31.33.134
IPs:
  IP:  172.31.33.134
Containers:
  csi-beegfs-static-app:
    Container ID:  containerd://790125e868070b89654c538ea224b55a35e9330645765485394f9ef6f70de1ec
    Image:         alpine:latest
    Image ID:      docker.io/library/alpine@sha256:21dc6063fd678b478f57c0e13f47560d0ea4eeba26dfc947b2a4f81f686b9f45
    Port:          <none>
    Host Port:     <none>
    Command:
      ash
      -c
      touch "/mnt/static/touched-by-k8s-name-${POD_UUID}" && sleep 7d
    State:          Running
      Started:      Fri, 20 Dec 2024 16:57:29 +0800
    Ready:          True
    Restart Count:  0
    Environment:
      POD_UUID:   (v1:metadata.uid)
    Mounts:
      /mnt/static from csi-beegfs-static-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2p7dg (ro)
Conditions:
  Type                        Status
  PodReadyToStartContainers   True
  Initialized                 True
  Ready                       True
  ContainersReady             True
  PodScheduled                True
Volumes:
  csi-beegfs-static-volume:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  csi-beegfs-static-pvc
    ReadOnly:   false
  kube-api-access-2p7dg:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubern
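The container command above writes a marker file named after the Pod's UID (`POD_UUID` comes from `metadata.uid`) into /mnt/static, which makes a convenient end-to-end test: if the same file is visible on a host that mounts BeeGFS directly, the PV really is backed by BeeGFS. A small helper, assuming the marker name pattern from that command; the directory argument is a hypothetical placeholder for wherever the PV's path appears under the client mount.

```shell
#!/usr/bin/env bash
# Verify that the test Pod's marker file reached BeeGFS.
# The Pod runs: touch "/mnt/static/touched-by-k8s-name-${POD_UUID}" with POD_UUID = metadata.uid.

# check_marker DIR POD_UID -> returns 0 if DIR/touched-by-k8s-name-POD_UID exists
check_marker() {
  local dir=$1 uid=$2
  local marker="$dir/touched-by-k8s-name-$uid"
  if [ -e "$marker" ]; then
    echo "found: $marker"
  else
    echo "missing: $marker" >&2
    return 1
  fi
}
```

Usage: `uid=$(kubectl get pod csi-beegfs-static-app -o jsonpath='{.metadata.uid}')`, then `kubectl exec csi-beegfs-static-app -- ls /mnt/static` from the cluster side, and `check_marker <client mount>/<PV path> "$uid"` on the BeeGFS client host.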
