[Hive] Accessing Your Own Hive Metastore from Spark

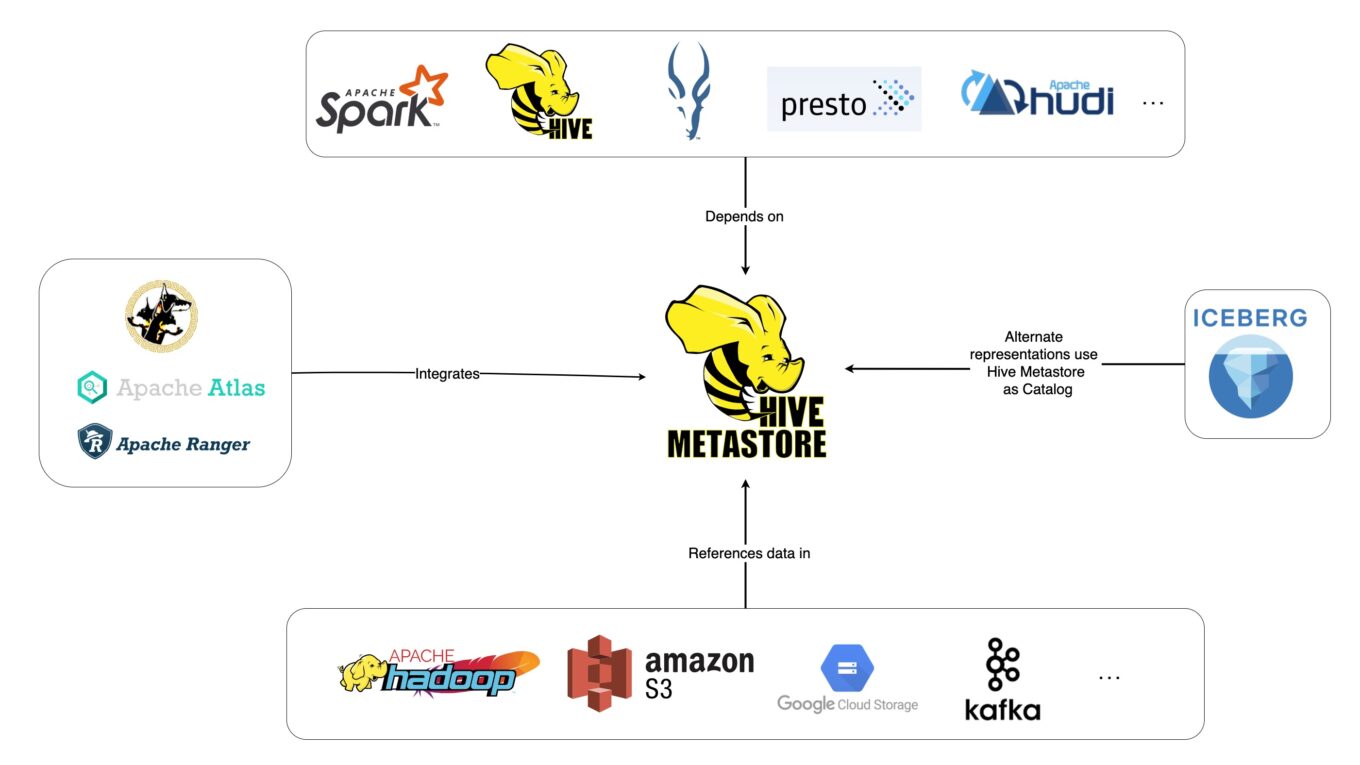

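Running your own Hive Metastore makes your data easier to manage, because the Hive Metastore links table definitions to the data stored on your big-data platform. The Hive Metastore can be deployed on different databases, such as MySQL or Microsoft SQL Database.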

Initializing the Hive Metastore

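The metastore can be initialized with schematool, a tool from the Apache Hive project. First, download a suitable Apache Hive release from https://dlcdn.apache.org/hive/. Using Apache Hive 3.1.3 as an example, run the following commands: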

wget https://dlcdn.apache.org/hive/hive-3.1.3/apache-hive-3.1.3-bin.tar.gz

tar -xvzf apache-hive-3.1.3-bin.tar.gz

cd apache-hive-3.1.3-bin/conf

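cp hive-default.xml.template hive-site.xml

Following the separate post on configuring Hive with MySQL, open the copied hive-site.xml and replace the values of the parameters listed below. Then run the last command.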

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastoredb</value>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<name>javax.jdo.option.ConnectionUserName</name>

<value>admin</value>

<name>javax.jdo.option.ConnectionPassword</name>

<value>password</value>

Finally, run:

schematool -dbType mysql -initSchema

Note: running this last command led to a series of problems.
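If you would rather not edit the long template by hand, a minimal hive-site.xml that holds only the four connection properties above should be enough for schematool to reach MySQL. The sketch below writes one with a heredoc and XML-escapes the values, since a JDBC URL containing `&` would otherwise make the file invalid. `write_hive_site` and `xml_escape` are hypothetical helper names, not part of Hive.

```bash
#!/usr/bin/env bash
# Escape the characters that must not appear raw in XML text (& must go first).
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Hypothetical helper: write a minimal hive-site.xml containing only the four
# JDO connection properties that schematool uses to reach the MySQL metastore.
# Usage: write_hive_site OUTPUT_FILE JDBC_URL USER PASSWORD
# Note: OUTPUT_FILE is overwritten.
write_hive_site() {
  local out=$1
  local url user password
  url=$(xml_escape "$2")
  user=$(xml_escape "$3")
  password=$(xml_escape "$4")
  cat > "$out" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>${url}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.cj.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>${user}</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>${password}</value>
  </property>
</configuration>
EOF
}
```

For example, `write_hive_site apache-hive-3.1.3-bin/conf/hive-site.xml 'jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastoredb' admin "$METASTORE_PASSWORD"`, where `METASTORE_PASSWORD` is an environment variable you set yourself.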

guava-19.0.jar version conflict

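apache-hive-3.1.3-bin/lib ships with a bundled guava-19.0.jar, which can conflict with the Guava version already on the classpath and produce the error below. Removing the jar resolves the error.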
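Removing the jar can be scripted. The sketch below moves guava-19.0.jar into a sibling backup directory instead of deleting it, so the change is easy to undo. `disable_hive_guava` and the `lib.disabled` directory name are hypothetical, and the default Hive directory is the `/tmp/apache-hive-3.1.3-bin` path seen in the log.

```bash
#!/usr/bin/env bash
# Hypothetical helper: move Hive's bundled guava-19.0.jar out of lib/ so that it
# no longer shadows the newer Guava that Hadoop puts on the classpath.
# Usage: disable_hive_guava [HIVE_HOME]
disable_hive_guava() {
  local hive_home=${1:-/tmp/apache-hive-3.1.3-bin}
  local jar="$hive_home/lib/guava-19.0.jar"
  local backup="$hive_home/lib.disabled"   # outside lib/, so not picked up as lib/*.jar

  if [ ! -f "$jar" ]; then
    echo "guava-19.0.jar not found in $hive_home/lib, nothing to do" >&2
    return 0                               # idempotent: a second run is harmless
  fi
  mkdir -p "$backup"
  mv "$jar" "$backup/"
  echo "moved guava-19.0.jar to $backup"
}
```

To undo it, move the jar from `lib.disabled` back into `lib`.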

root@c4916eacdf8342e0b5ba9cf10a601a11000000:/tmp/apache-hive-3.1.3-bin/bin# ./schematool -dbType mysql -userName atgenomix@metastoretest -initSchema

WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/tmp/apache-hive-3.1.3-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.NoSuchMethodError: com.google.common.base.Preconditions.checkArgument(ZLjava/lang/String;Ljava/lang/Object;)V

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1380)

at org.apache.hadoop.conf.Configuration.set(Configuration.java:1361)

at org.apache.hadoop.mapred.JobConf.setJar(JobConf.java:536)

at org.apache.hadoop.mapred.JobConf.setJarByClass(JobConf.java:554)

at org.apache.hadoop.mapred.JobConf.<init>(JobConf.java:448)

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5144)

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5107)

at org.apache.hive.beeline.HiveSchemaTool.<init>(HiveSchemaTool.java:96)

at org.apache.hive.beeline.HiveSchemaTool.main(HiveSchemaTool.java:1473)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.util.RunJar.run(RunJar.java:323)

at org.apache.hadoop.util.RunJar.main(RunJar.java:236)

ClassNotFoundException: com.mysql.cj.jdbc.Driver

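To fix this, download the MySQL JDBC driver into apache-hive-4.0.0-alpha-1-bin/lib with the following command (the runs from here on use Hive 4.0.0-alpha-1):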

wget -P apache-hive-4.0.0-alpha-1-bin/lib https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.30/mysql-connector-java-8.0.30.jar

Before the driver was in place, schematool failed with:

root@c4916eacdf8342e0b5ba9cf10a601a11000000:/tmp/apache-hive-4.0.0-alpha-1-bin/bin# ./schematool -dbType mysql -initSchema

WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/tmp/apache-hive-4.0.0-alpha-1-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Initializing the schema to: 4.0.0-alpha-1

Metastore connection URL: jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastoredb

Metastore connection Driver : com.mysql.cj.jdbc.Driver

Metastore connection User: admin

Failed to load driver

Underlying cause: java.lang.ClassNotFoundException : com.mysql.cj.jdbc.Driver

Use --verbose for detailed stacktrace.

*** schemaTool failed ***

Output once the database was initialized successfully:

root@c4916eacdf8342e0b5ba9cf10a601a11000000:/tmp/apache-hive-4.0.0-alpha-1-bin/bin# ./schematool -dbType mysql -userName atgenomix@metastoretest -initSchema

WARNING: HADOOP_PREFIX has been replaced by HADOOP_HOME. Using value of HADOOP_PREFIX.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/tmp/apache-hive-4.0.0-alpha-1-bin/lib/log4j-slf4j-impl-2.17.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-3.3.0/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Initializing the schema to: 4.0.0-alpha-1

Metastore connection URL: jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastoredb

Metastore connection Driver : com.mysql.cj.jdbc.Driver

Metastore connection User: admin@metastoreserver

Starting metastore schema initialization to 4.0.0-alpha-1

Initialization script hive-schema-4.0.0-alpha-1.mysql.sql

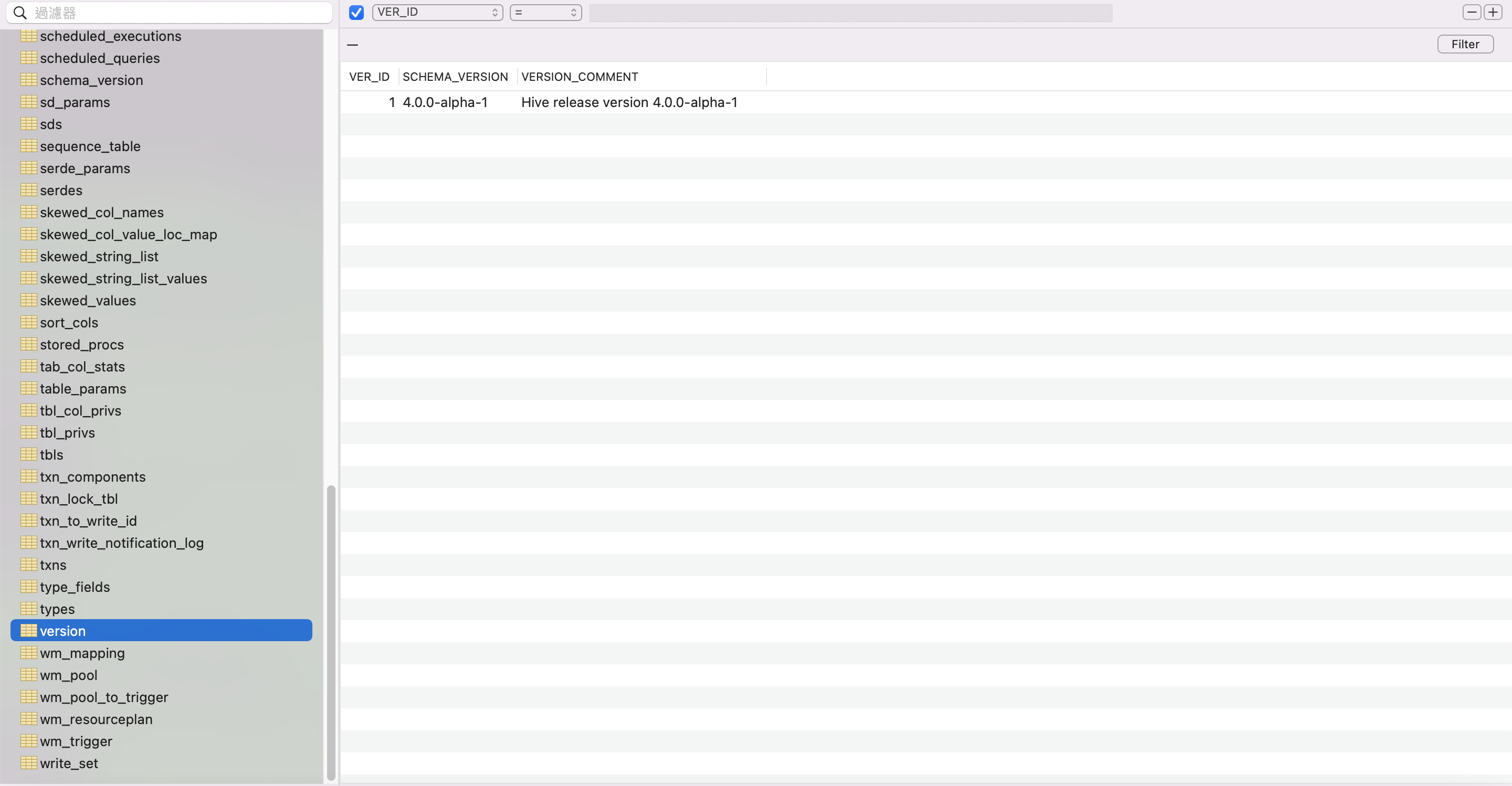

Initialization script completed以下爲 MySQL 資料庫截圖,使用 Hive Schema Version 4.0.0 就有多一個 catalogs 的層級!

Spark configuration for connecting Spark 3.3.0 to Hive Metastore 2.3.9

Many Spark SQL applications today use Delta Lake, which needs the following settings:

spark.sql.extensions io.delta.sql.DeltaSparkSessionExtension

spark.sql.catalog.spark_catalog org.apache.spark.sql.delta.catalog.DeltaCatalog

spark.jars.packages io.delta:delta-core_2.12:2.1.0

This example uses Spark 3.3.0. The Hive metastore client bundled in spark-hive_2.12-3.3.0.jar is version 2.3.9, so a Hive Metastore running version 2.3.0 can be connected to directly with the following settings:

spark.sql.hive.metastore.version 2.3.9

spark.hadoop.datanucleus.autoCreateSchema true

spark.hadoop.datanucleus.fixedDatastore false

spark.hadoop.datanucleus.schema.autoCreateTables true

spark.databricks.delta.schema.autoMerge.enabled true

To have Spark connect to the Hive Metastore, the following settings are also needed:

spark.hadoop.javax.jdo.option.ConnectionUserName admin@metastoreserver

spark.hadoop.javax.jdo.option.ConnectionURL jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastore?createDatabaseIfNotExist=true&serverTimezone=UTC

spark.hadoop.javax.jdo.option.ConnectionPassword password

spark.hadoop.javax.jdo.option.ConnectionDriverName com.mysql.cj.jdbc.Driver

spark.sql.warehouse.dir abfss://container@storageaccount.dfs.core.windows.net/user/hive/warehouse/

For more details on these Spark configuration settings, see https://spark.apache.org/docs/latest/sql-data-sources-hive-tables.html
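All of the settings in this section end up in spark-defaults.conf. A minimal sketch that appends them from a script, reading the password from an environment variable instead of typing it into the file by hand. `write_spark_defaults` and `METASTORE_PASSWORD` are hypothetical names, and the host, user, and storage account values are the placeholders used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the Delta Lake + Hive Metastore 2.3.9 settings
# from this section to a spark-defaults.conf file.
# Usage: METASTORE_PASSWORD=... write_spark_defaults CONF_FILE
write_spark_defaults() {
  local conf=$1
  if [ -z "${METASTORE_PASSWORD:-}" ]; then
    echo "METASTORE_PASSWORD is not set" >&2
    return 1
  fi
  cat >> "$conf" <<EOF
# Delta Lake
spark.sql.extensions io.delta.sql.DeltaSparkSessionExtension
spark.sql.catalog.spark_catalog org.apache.spark.sql.delta.catalog.DeltaCatalog
spark.jars.packages io.delta:delta-core_2.12:2.1.0
spark.databricks.delta.schema.autoMerge.enabled true
# Built-in Hive 2.3.9 client
spark.sql.hive.metastore.version 2.3.9
spark.hadoop.datanucleus.autoCreateSchema true
spark.hadoop.datanucleus.fixedDatastore false
spark.hadoop.datanucleus.schema.autoCreateTables true
# Metastore connection (MySQL) and warehouse location
spark.hadoop.javax.jdo.option.ConnectionUserName admin@metastoreserver
spark.hadoop.javax.jdo.option.ConnectionURL jdbc:mysql://metastoreserver.mysql.database.azure.com:3306/metastore?createDatabaseIfNotExist=true&serverTimezone=UTC
spark.hadoop.javax.jdo.option.ConnectionPassword ${METASTORE_PASSWORD}
spark.hadoop.javax.jdo.option.ConnectionDriverName com.mysql.cj.jdbc.Driver
spark.sql.warehouse.dir abfss://container@storageaccount.dfs.core.windows.net/user/hive/warehouse/
EOF
}
```

For example, `METASTORE_PASSWORD=... write_spark_defaults "$SPARK_HOME/conf/spark-defaults.conf"`. Because the function appends, running it twice writes the block twice.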

Configuration for connecting Spark 3.3.0 to Hive Metastore 3.1.3

Connecting Spark 3.3.0 to a Hive Metastore newer than 2.3 takes considerably more setup. The additional requirements are:

- Install apache-hive-3.1.3-bin.tar.gz

- Configure hive-site.xml inside apache-hive-3.1.3

- Set the following parameters in spark-defaults.conf

- Place mysql-connector-java-8.0.30.jar in /opt/hive/lib

- Place commons-collections-3.2.2.jar in /opt/hive/lib
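The last two items come down to putting two extra jars into /opt/hive/lib, the directory that `spark.sql.hive.metastore.jars.path` points to (assuming apache-hive-3.1.3-bin was unpacked to /opt/hive, as the paths suggest). The sketch below downloads whichever jar is missing from Maven Central with wget. `maven_url` and `stage_metastore_jars` are hypothetical helper names.

```bash
#!/usr/bin/env bash
MAVEN_CENTRAL=https://repo1.maven.org/maven2

# maven_url GROUP:ARTIFACT:VERSION -> URL of that jar on Maven Central
# (dots in the group id become path separators).
maven_url() {
  local group artifact version
  IFS=: read -r group artifact version <<<"$1"
  echo "$MAVEN_CENTRAL/${group//.//}/$artifact/$version/$artifact-$version.jar"
}

# Hypothetical helper: make sure the MySQL JDBC driver and commons-collections
# 3.2.2 are present in DIR, downloading only the ones that are missing.
# Usage: stage_metastore_jars [DIR]
stage_metastore_jars() {
  local dir=${1:-/opt/hive/lib} coord url
  mkdir -p "$dir"
  for coord in mysql:mysql-connector-java:8.0.30 \
               commons-collections:commons-collections:3.2.2; do
    url=$(maven_url "$coord")
    if [ -f "$dir/${url##*/}" ]; then
      echo "already present: ${url##*/}"
    else
      wget -q -P "$dir" "$url" || return 1   # stop on the first failed download
    fi
  done
}
```

The spark-defaults.conf parameters referred to in the third item are: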

spark.sql.hive.metastore.version 3.1.2

spark.sql.hive.metastore.jars path

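spark.sql.hive.metastore.jars.path file:///opt/hive/lib/*.jar

During a test deployment the following error appeared. The last item in the list above (placing commons-collections-3.2.2.jar in /opt/hive/lib) resolves it: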

2023-04-12T01:29:05,306 ERROR [main] org.apache.hadoop.hive.metastore.RetryingHMSHandler - java.lang.NoClassDefFoundError: org/apache/commons/collections/CollectionUtils

at org.apache.hadoop.hive.metastore.ObjectStore.grantPrivileges(ObjectStore.java:5745)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy39.grantPrivileges(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles_core(HiveMetaStore.java:830)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles(HiveMetaStore.java:796)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:541)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8678)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4306)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4374)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4354)

at org.apache.hadoop.hive.ql.metadata.Hive.getDatabase(Hive.java:1662)

at org.apache.hadoop.hive.ql.metadata.Hive.databaseExists(Hive.java:1651)

at org.apache.spark.sql.hive.client.Shim_v0_12.databaseExists(HiveShim.scala:609)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$databaseExists$1(HiveClientImpl.scala:394)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:294)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:225)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:224)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:274)

at org.apache.spark.sql.hive.client.HiveClientImpl.databaseExists(HiveClientImpl.scala:394)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$databaseExists$1(HiveExternalCatalog.scala:223)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:101)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:223)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:150)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:140)

at org.apache.spark.sql.internal.SharedState.globalTempViewManager$lzycompute(SharedState.scala:170)

at org.apache.spark.sql.internal.SharedState.globalTempViewManager(SharedState.scala:168)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.$anonfun$catalog$2(HiveSessionStateBuilder.scala:70)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.globalTempViewManager$lzycompute(SessionCatalog.scala:122)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.globalTempViewManager(SessionCatalog.scala:122)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1031)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1017)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1009)

at org.apache.spark.sql.execution.datasources.v2.V2SessionCatalog.listTables(V2SessionCatalog.scala:57)

at org.apache.spark.sql.connector.catalog.DelegatingCatalogExtension.listTables(DelegatingCatalogExtension.java:61)

at org.apache.spark.sql.execution.datasources.v2.ShowTablesExec.run(ShowTablesExec.scala:40)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result$lzycompute(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.executeCollect(V2CommandExec.scala:49)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.$anonfun$applyOrElse$1(QueryExecution.scala:98)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$6(SQLExecution.scala:109)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:169)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:95)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:98)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:94)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:584)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:176)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:584)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$$super$transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:267)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:263)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:560)

at org.apache.spark.sql.execution.QueryExecution.eagerlyExecuteCommands(QueryExecution.scala:94)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted$lzycompute(QueryExecution.scala:81)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted(QueryExecution.scala:79)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:220)

at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:100)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:97)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:622)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:617)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:23)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:27)

at $line14.$read$$iw$$iw$$iw$$iw$$iw$$iw.<init>(<console>:29)

at $line14.$read$$iw$$iw$$iw$$iw$$iw.<init>(<console>:31)

at $line14.$read$$iw$$iw$$iw$$iw.<init>(<console>:33)

at $line14.$read$$iw$$iw$$iw.<init>(<console>:35)

at $line14.$read$$iw$$iw.<init>(<console>:37)

at $line14.$read$$iw.<init>(<console>:39)

at $line14.$read.<init>(<console>:41)

at $line14.$read$.<init>(<console>:45)

at $line14.$read$.<clinit>(<console>)

at $line14.$eval$.$print$lzycompute(<console>:7)

at $line14.$eval$.$print(<console>:6)

at $line14.$eval.$print(<console>)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at scala.tools.nsc.interpreter.IMain$ReadEvalPrint.call(IMain.scala:747)

at scala.tools.nsc.interpreter.IMain$Request.loadAndRun(IMain.scala:1020)

at scala.tools.nsc.interpreter.IMain.$anonfun$interpret$1(IMain.scala:568)

at scala.reflect.internal.util.ScalaClassLoader.asContext(ScalaClassLoader.scala:36)

at scala.reflect.internal.util.ScalaClassLoader.asContext$(ScalaClassLoader.scala:116)

at scala.reflect.internal.util.AbstractFileClassLoader.asContext(AbstractFileClassLoader.scala:41)

at scala.tools.nsc.interpreter.IMain.loadAndRunReq$1(IMain.scala:567)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:594)

at scala.tools.nsc.interpreter.IMain.interpret(IMain.scala:564)

at scala.tools.nsc.interpreter.ILoop.interpretStartingWith(ILoop.scala:865)

at scala.tools.nsc.interpreter.ILoop.command(ILoop.scala:733)

at scala.tools.nsc.interpreter.ILoop.processLine(ILoop.scala:435)

at scala.tools.nsc.interpreter.ILoop.loop(ILoop.scala:456)

at org.apache.spark.repl.SparkILoop.process(SparkILoop.scala:239)

at org.apache.spark.repl.Main$.doMain(Main.scala:78)

at org.apache.spark.repl.Main$.main(Main.scala:58)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.JavaMainApplication.start(SparkApplication.scala:52)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:958)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1046)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1055)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.collections.CollectionUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:387)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at org.apache.spark.sql.hive.client.IsolatedClientLoader$$anon$1.doLoadClass(IsolatedClientLoader.scala:269)

at org.apache.spark.sql.hive.client.IsolatedClientLoader$$anon$1.loadClass(IsolatedClientLoader.scala:258)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 136 more

2023-04-12T01:29:05,307 ERROR [main] org.apache.hadoop.hive.metastore.RetryingHMSHandler - HMSHandler Fatal error: java.lang.NoClassDefFoundError: org/apache/commons/collections/CollectionUtils


org.apache.spark.sql.AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:110)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:223)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:150)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:140)

at org.apache.spark.sql.internal.SharedState.globalTempViewManager$lzycompute(SharedState.scala:170)

at org.apache.spark.sql.internal.SharedState.globalTempViewManager(SharedState.scala:168)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.$anonfun$catalog$2(HiveSessionStateBuilder.scala:70)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.globalTempViewManager$lzycompute(SessionCatalog.scala:122)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.globalTempViewManager(SessionCatalog.scala:122)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1031)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1017)

at org.apache.spark.sql.catalyst.catalog.SessionCatalog.listTables(SessionCatalog.scala:1009)

at org.apache.spark.sql.execution.datasources.v2.V2SessionCatalog.listTables(V2SessionCatalog.scala:57)

at org.apache.spark.sql.connector.catalog.DelegatingCatalogExtension.listTables(DelegatingCatalogExtension.java:61)

at org.apache.spark.sql.execution.datasources.v2.ShowTablesExec.run(ShowTablesExec.scala:40)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result$lzycompute(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.executeCollect(V2CommandExec.scala:49)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.$anonfun$applyOrElse$1(QueryExecution.scala:98)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$6(SQLExecution.scala:109)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:169)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withNewExecutionId$1(SQLExecution.scala:95)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:64)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:98)

at org.apache.spark.sql.execution.QueryExecution$$anonfun$eagerlyExecuteCommands$1.applyOrElse(QueryExecution.scala:94)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:584)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:176)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:584)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$$super$transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:267)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:263)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:30)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:560)

at org.apache.spark.sql.execution.QueryExecution.eagerlyExecuteCommands(QueryExecution.scala:94)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted$lzycompute(QueryExecution.scala:81)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted(QueryExecution.scala:79)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:220)

at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:100)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:97)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:622)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:779)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:617)

... 47 elided

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.metadata.Hive.getDatabase(Hive.java:1666)

at org.apache.hadoop.hive.ql.metadata.Hive.databaseExists(Hive.java:1651)

at org.apache.spark.sql.hive.client.Shim_v0_12.databaseExists(HiveShim.scala:609)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$databaseExists$1(HiveClientImpl.scala:394)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:294)

at org.apache.spark.sql.hive.client.HiveClientImpl.liftedTree1$1(HiveClientImpl.scala:225)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:224)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:274)

at org.apache.spark.sql.hive.client.HiveClientImpl.databaseExists(HiveClientImpl.scala:394)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$databaseExists$1(HiveExternalCatalog.scala:223)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:101)

... 91 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:86)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4306)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4374)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4354)

at org.apache.hadoop.hive.ql.metadata.Hive.getDatabase(Hive.java:1662)

... 103 more

Caused by: java.lang.reflect.InvocationTargetException: org.apache.hadoop.hive.metastore.api.MetaException: org/apache/commons/collections/CollectionUtils

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84)

... 110 more

Caused by: org.apache.hadoop.hive.metastore.api.MetaException: org/apache/commons/collections/CollectionUtils

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8678)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94)

... 115 more

Caused by: java.lang.NoClassDefFoundError: org/apache/commons/collections/CollectionUtils

at org.apache.hadoop.hive.metastore.ObjectStore.grantPrivileges(ObjectStore.java:5745)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:97)

at com.sun.proxy.$Proxy39.grantPrivileges(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles_core(HiveMetaStore.java:830)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultRoles(HiveMetaStore.java:796)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:541)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80)

... 119 more

Caused by: java.lang.ClassNotFoundException: org.apache.commons.collections.CollectionUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:387)

at java.lang.ClassLoader.loadClass(ClassLoader.java:418)

at org.apache.spark.sql.hive.client.IsolatedClientLoader$$anon$1.doLoadClass(IsolatedClientLoader.scala:269)

at org.apache.spark.sql.hive.client.IsolatedClientLoader$$anon$1.loadClass(IsolatedClientLoader.scala:258)

at java.lang.ClassLoader.loadClass(ClassLoader.java:351)

... 136 more

Successfully accessing Hive Metastore 3.1.3 from Spark 3.3.0

scala> spark.sql("SHOW TABLES").show()

2023-04-12T01:34:57,414 INFO [main] org.apache.hadoop.hive.conf.HiveConf - Found configuration file file:/opt/hive/conf/hive-site.xml

Hive Session ID = 95f985d7-ad2b-4379-9853-c23e867bbb5e

2023-04-12T01:34:57,611 INFO [main] SessionState - Hive Session ID = 95f985d7-ad2b-4379-9853-c23e867bbb5e

2023-04-12T01:34:57,880 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: Opening raw store with implementation class:org.apache.hadoop.hive.metastore.ObjectStore

2023-04-12T01:34:57,905 WARN [main] org.apache.hadoop.hive.metastore.ObjectStore - datanucleus.autoStartMechanismMode is set to unsupported value null . Setting it to value: ignored

2023-04-12T01:34:57,905 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - ObjectStore, initialize called

2023-04-12T01:34:57,905 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Unable to find config file hive-site.xml

2023-04-12T01:34:57,905 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file null

2023-04-12T01:34:57,905 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Unable to find config file hivemetastore-site.xml

2023-04-12T01:34:57,906 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file null

2023-04-12T01:34:57,906 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Unable to find config file metastore-site.xml

2023-04-12T01:34:57,906 INFO [main] org.apache.hadoop.hive.metastore.conf.MetastoreConf - Found configuration file null

2023-04-12T01:34:58,015 INFO [main] DataNucleus.Persistence - Property datanucleus.cache.level2 unknown - will be ignored

2023-04-12T01:34:58,131 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Starting...

2023-04-12T01:34:58,419 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-1 - Start completed.

2023-04-12T01:34:58,441 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-2 - Starting...

2023-04-12T01:34:58,506 INFO [main] com.zaxxer.hikari.HikariDataSource - HikariPool-2 - Start completed.

2023-04-12T01:34:58,564 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Setting MetaStore object pin classes with hive.metastore.cache.pinobjtypes="Table,StorageDescriptor,SerDeInfo,Partition,Database,Type,FieldSchema,Order"

2023-04-12T01:34:58,676 INFO [main] org.apache.hadoop.hive.metastore.MetaStoreDirectSql - Using direct SQL, underlying DB is MYSQL

2023-04-12T01:34:58,677 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Initialized ObjectStore

2023-04-12T01:34:58,817 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:58,818 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:58,818 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:58,819 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:58,819 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:58,819 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,093 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,093 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,094 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,094 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,094 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,094 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2023-04-12T01:34:59,741 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added admin role in metastore

2023-04-12T01:34:59,749 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - Added public role in metastore

2023-04-12T01:34:59,823 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - No user is added in admin role, since config is empty

2023-04-12T01:34:59,929 INFO [main] org.apache.hadoop.hive.metastore.RetryingMetaStoreClient - RetryingMetaStoreClient proxy=class org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient ugi=root (auth:SIMPLE) retries=1 delay=1 lifetime=0

2023-04-12T01:34:59,950 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#default

2023-04-12T01:34:59,951 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#default

2023-04-12T01:34:59,964 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#global_temp

2023-04-12T01:34:59,964 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#global_temp

2023-04-12T01:34:59,973 WARN [main] org.apache.hadoop.hive.metastore.ObjectStore - Failed to get database hive.global_temp, returning NoSuchObjectException

2023-04-12T01:34:59,975 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#default

2023-04-12T01:34:59,975 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#default

2023-04-12T01:34:59,984 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_database: @hive#default

2023-04-12T01:34:59,984 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_database: @hive#default

2023-04-12T01:34:59,993 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore - 0: get_tables: db=@hive#default pat=*

2023-04-12T01:34:59,993 INFO [main] org.apache.hadoop.hive.metastore.HiveMetaStore.audit - ugi=root ip=unknown-ip-addr cmd=get_tables: db=@hive#default pat=*

+---------+---------+-----------+
|namespace|tableName|isTemporary|
+---------+---------+-----------+
|  default| employee|      false|
+---------+---------+-----------+
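The earlier failure ends in `java.lang.ClassNotFoundException: org.apache.commons.collections.CollectionUtils`, thrown from Spark's `IsolatedClientLoader`. That suggests the class was not in any jar Spark loaded for its Hive client.

The sketch below is a quick way to check this. It assumes the Hive client jars sit in a single directory, for example the `apache-hive-3.1.3-bin/lib` directory from the download step, or whatever directory your Spark configuration points the Hive client at. `FindClassInJars` is a hypothetical helper, not part of Spark or Hive. It lists the jars in that directory that contain the class file, using only `java.util.jar.JarFile` from the JDK.

```scala
import java.io.File
import java.util.jar.JarFile

// Hypothetical diagnostic helper (not a Spark or Hive API).
object FindClassInJars {
  /** Returns the .jar files directly under `dir` that contain `className` as a .class entry. */
  def jarsContaining(dir: File, className: String): Seq[File] = {
    // "org.apache.commons.collections.CollectionUtils" -> "org/apache/commons/collections/CollectionUtils.class"
    val entryName = className.replace('.', '/') + ".class"
    // listFiles() returns null when dir does not exist; treat that as "no jars".
    val jars = Option(dir.listFiles()).getOrElse(Array.empty[File])
      .filter(f => f.isFile && f.getName.endsWith(".jar"))
      .sortBy(_.getName)
    jars.filter { jar =>
      val jf = new JarFile(jar)
      try jf.getEntry(entryName) != null
      finally jf.close()
    }.toSeq
  }

  def main(args: Array[String]): Unit = {
    // Assumed default path: the Hive lib directory from the earlier download step.
    val dir = new File(if (args.nonEmpty) args(0) else "apache-hive-3.1.3-bin/lib")
    val cls = if (args.length > 1) args(1) else "org.apache.commons.collections.CollectionUtils"
    val hits = jarsContaining(dir, cls)
    if (hits.isEmpty) println(s"$cls not found in any jar under $dir")
    else hits.foreach(j => println(s"$cls found in ${j.getName}"))
  }
}
```

Pass the jar directory as the first argument and, optionally, a class name as the second. If no jar is reported, one plausible remedy is to add a jar that provides the `org.apache.commons.collections` package to that directory. This is an assumption, not the step recorded in this post. That package belongs to Apache Commons Collections 3.x, since 4.x moved to `org.apache.commons.collections4`.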