[Hive] Metastore in Azure Databricks

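When developing Spark and Delta Lake applications, we create many resources such as tables and databases. How are these tables actually managed? That is the role of the Hive Metastore. We use Spark SQL so naturally, but do we really understand what happens behind the scenes? In this post we document how to use a custom (external) Hive Metastore on Azure Databricks.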

Using Azure SQL Database as the Databricks Metastore (Hive Metastore 1.2.1 and below) (link)

Following the linked article, we try to use Azure SQL Database as an external metastore for Databricks. As the article describes, we first set the Spark config as follows:

```
spark.sql.hive.metastore.version 1.2.1
spark.hadoop.javax.jdo.option.ConnectionUserName user@sqlserver
spark.hadoop.javax.jdo.option.ConnectionURL jdbc:sqlserver://sqlserver.database.windows.net:1433;database=metastore
spark.hadoop.javax.jdo.option.ConnectionPassword password
spark.hadoop.javax.jdo.option.ConnectionDriverName com.microsoft.sqlserver.jdbc.SQLServerDriver
spark.sql.hive.metastore.jars maven
```

Next, running CREATE DATABASE test in a notebook fails with the following error:

com.databricks.backend.common.rpc.SparkDriverExceptions$SQLExecutionException: org.apache.spark.sql.AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$withClient$2(HiveExternalCatalog.scala:160)

at org.apache.spark.sql.hive.HiveExternalCatalog.maybeSynchronized(HiveExternalCatalog.scala:112)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$withClient$1(HiveExternalCatalog.scala:150)

at com.databricks.backend.daemon.driver.ProgressReporter$.withStatusCode(ProgressReporter.scala:377)

at com.databricks.backend.daemon.driver.ProgressReporter$.withStatusCode(ProgressReporter.scala:363)

at com.databricks.spark.util.SparkDatabricksProgressReporter$.withStatusCode(ProgressReporter.scala:34)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:149)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:310)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:228)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:218)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:59)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.$anonfun$hiveCatalog$1(HiveSessionStateBuilder.scala:74)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.externalCatalog$lzycompute(SessionCatalog.scala:544)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.externalCatalog(SessionCatalog.scala:544)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.databaseExists(SessionCatalog.scala:763)

at com.databricks.sql.managedcatalog.ManagedCatalogSessionCatalog.databaseExists(ManagedCatalogSessionCatalog.scala:577)

at com.databricks.sql.managedcatalog.UnityCatalogV2Proxy.$anonfun$namespaceExists$1(UnityCatalogV2Proxy.scala:120)

at com.databricks.sql.managedcatalog.UnityCatalogV2Proxy.$anonfun$namespaceExists$1$adapted(UnityCatalogV2Proxy.scala:120)

at com.databricks.sql.managedcatalog.UnityCatalogV2Proxy.assertSingleNamespace(UnityCatalogV2Proxy.scala:114)

at com.databricks.sql.managedcatalog.UnityCatalogV2Proxy.namespaceExists(UnityCatalogV2Proxy.scala:120)

at org.apache.spark.sql.execution.datasources.v2.CreateNamespaceExec.run(CreateNamespaceExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result$lzycompute(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.executeCollect(V2CommandExec.scala:49)

at org.apache.spark.sql.execution.QueryExecution$anonfun$nestedInanonfun$eagerlyExecuteCommands$1$1.$anonfun$applyOrElse$1(QueryExecution.scala:202)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withCustomExecutionEnv$8(SQLExecution.scala:240)

at org.apache.spark.sql.execution.SQLExecution$.withSQLConfPropagated(SQLExecution.scala:388)

at org.apache.spark.sql.execution.SQLExecution$.$anonfun$withCustomExecutionEnv$1(SQLExecution.scala:187)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:973)

at org.apache.spark.sql.execution.SQLExecution$.withCustomExecutionEnv(SQLExecution.scala:142)

at org.apache.spark.sql.execution.SQLExecution$.withNewExecutionId(SQLExecution.scala:338)

at org.apache.spark.sql.execution.QueryExecution$anonfun$nestedInanonfun$eagerlyExecuteCommands$1$1.applyOrElse(QueryExecution.scala:202)

at org.apache.spark.sql.execution.QueryExecution$anonfun$nestedInanonfun$eagerlyExecuteCommands$1$1.applyOrElse(QueryExecution.scala:198)

at org.apache.spark.sql.catalyst.trees.TreeNode.$anonfun$transformDownWithPruning$1(TreeNode.scala:591)

at org.apache.spark.sql.catalyst.trees.CurrentOrigin$.withOrigin(TreeNode.scala:178)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDownWithPruning(TreeNode.scala:591)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.org$apache$spark$sql$catalyst$plans$logical$AnalysisHelper$super$transformDownWithPruning(LogicalPlan.scala:31)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning(AnalysisHelper.scala:268)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper.transformDownWithPruning$(AnalysisHelper.scala:264)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:31)

at org.apache.spark.sql.catalyst.plans.logical.LogicalPlan.transformDownWithPruning(LogicalPlan.scala:31)

at org.apache.spark.sql.catalyst.trees.TreeNode.transformDown(TreeNode.scala:567)

at org.apache.spark.sql.execution.QueryExecution.$anonfun$eagerlyExecuteCommands$1(QueryExecution.scala:198)

at org.apache.spark.sql.catalyst.plans.logical.AnalysisHelper$.allowInvokingTransformsInAnalyzer(AnalysisHelper.scala:324)

at org.apache.spark.sql.execution.QueryExecution.eagerlyExecuteCommands(QueryExecution.scala:198)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted$lzycompute(QueryExecution.scala:183)

at org.apache.spark.sql.execution.QueryExecution.commandExecuted(QueryExecution.scala:174)

at org.apache.spark.sql.Dataset.<init>(Dataset.scala:237)

at org.apache.spark.sql.Dataset$.$anonfun$ofRows$2(Dataset.scala:106)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:973)

at org.apache.spark.sql.Dataset$.ofRows(Dataset.scala:103)

at org.apache.spark.sql.SparkSession.$anonfun$sql$1(SparkSession.scala:808)

at org.apache.spark.sql.SparkSession.withActive(SparkSession.scala:973)

at org.apache.spark.sql.SparkSession.sql(SparkSession.scala:803)

at org.apache.spark.sql.SQLContext.sql(SQLContext.scala:695)

at com.databricks.backend.daemon.driver.SQLDriverLocal.$anonfun$executeSql$1(SQLDriverLocal.scala:91)

at scala.collection.immutable.List.map(List.scala:293)

at com.databricks.backend.daemon.driver.SQLDriverLocal.executeSql(SQLDriverLocal.scala:37)

at com.databricks.backend.daemon.driver.SQLDriverLocal.repl(SQLDriverLocal.scala:145)

at com.databricks.backend.daemon.driver.DriverLocal.$anonfun$execute$20(DriverLocal.scala:668)

at com.databricks.unity.EmptyHandle$.runWith(UCSHandle.scala:41)

at com.databricks.backend.daemon.driver.DriverLocal.$anonfun$execute$18(DriverLocal.scala:668)

at com.databricks.logging.Log4jUsageLoggingShim$.$anonfun$withAttributionContext$1(Log4jUsageLoggingShim.scala:32)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)

at com.databricks.logging.AttributionContext$.withValue(AttributionContext.scala:94)

at com.databricks.logging.Log4jUsageLoggingShim$.withAttributionContext(Log4jUsageLoggingShim.scala:30)

at com.databricks.logging.UsageLogging.withAttributionContext(UsageLogging.scala:283)

at com.databricks.logging.UsageLogging.withAttributionContext$(UsageLogging.scala:282)

at com.databricks.backend.daemon.driver.DriverLocal.withAttributionContext(DriverLocal.scala:62)

at com.databricks.logging.UsageLogging.withAttributionTags(UsageLogging.scala:318)

at com.databricks.logging.UsageLogging.withAttributionTags$(UsageLogging.scala:303)

at com.databricks.backend.daemon.driver.DriverLocal.withAttributionTags(DriverLocal.scala:62)

at com.databricks.backend.daemon.driver.DriverLocal.execute(DriverLocal.scala:645)

at com.databricks.backend.daemon.driver.DriverWrapper.$anonfun$tryExecutingCommand$1(DriverWrapper.scala:622)

at scala.util.Try$.apply(Try.scala:213)

at com.databricks.backend.daemon.driver.DriverWrapper.tryExecutingCommand(DriverWrapper.scala:614)

at com.databricks.backend.daemon.driver.DriverWrapper.executeCommandAndGetError(DriverWrapper.scala:533)

at com.databricks.backend.daemon.driver.DriverWrapper.executeCommand(DriverWrapper.scala:568)

at com.databricks.backend.daemon.driver.DriverWrapper.runInnerLoop(DriverWrapper.scala:438)

at com.databricks.backend.daemon.driver.DriverWrapper.runInner(DriverWrapper.scala:381)

at com.databricks.backend.daemon.driver.DriverWrapper.run(DriverWrapper.scala:232)

at java.lang.Thread.run(Thread.java:748)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.metadata.Hive.getDatabase(Hive.java:1305)

at org.apache.hadoop.hive.ql.metadata.Hive.databaseExists(Hive.java:1290)

at org.apache.spark.sql.hive.client.Shim_v0_12.databaseExists(HiveShim.scala:619)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$databaseExists$1(HiveClientImpl.scala:435)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$withHiveState$1(HiveClientImpl.scala:335)

at org.apache.spark.sql.hive.client.HiveClientImpl.$anonfun$retryLocked$1(HiveClientImpl.scala:236)

at org.apache.spark.sql.hive.client.HiveClientImpl.synchronizeOnObject(HiveClientImpl.scala:272)

at org.apache.spark.sql.hive.client.HiveClientImpl.retryLocked(HiveClientImpl.scala:228)

at org.apache.spark.sql.hive.client.HiveClientImpl.withHiveState(HiveClientImpl.scala:315)

at org.apache.spark.sql.hive.client.HiveClientImpl.databaseExists(HiveClientImpl.scala:435)

at org.apache.spark.sql.hive.client.PoolingHiveClient.$anonfun$databaseExists$1(PoolingHiveClient.scala:321)

at org.apache.spark.sql.hive.client.PoolingHiveClient.$anonfun$databaseExists$1$adapted(PoolingHiveClient.scala:320)

at org.apache.spark.sql.hive.client.PoolingHiveClient.withHiveClient(PoolingHiveClient.scala:149)

at org.apache.spark.sql.hive.client.PoolingHiveClient.databaseExists(PoolingHiveClient.scala:320)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$databaseExists$1(HiveExternalCatalog.scala:310)

at scala.runtime.java8.JFunction0$mcZ$sp.apply(JFunction0$mcZ$sp.java:23)

at com.databricks.spark.util.FrameProfiler$.record(FrameProfiler.scala:80)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$withClient$2(HiveExternalCatalog.scala:151)

... 81 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:132)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:104)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3005)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3024)

at org.apache.hadoop.hive.ql.metadata.Hive.getDatabase(Hive.java:1301)

... 99 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1521)

... 105 more

Caused by: javax.jdo.JDODataStoreException: Required table missing : "VERSION" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"

NestedThrowables:

org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "VERSION" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:461)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:732)

at org.datanucleus.api.jdo.JDOPersistenceManager.makePersistent(JDOPersistenceManager.java:752)

at org.apache.hadoop.hive.metastore.ObjectStore.setMetaStoreSchemaVersion(ObjectStore.java:6773)

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:6670)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:6645)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:114)

at com.sun.proxy.$Proxy86.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:572)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:624)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:461)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:66)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:72)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:5768)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:199)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:74)

... 110 more

Caused by: org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "VERSION" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"

at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:485)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3380)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.addClassTablesAndValidate(RDBMSStoreManager.java:3190)

at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2841)

at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:122)

at org.datanucleus.store.rdbms.RDBMSStoreManager.addClasses(RDBMSStoreManager.java:1605)

at org.datanucleus.store.AbstractStoreManager.addClass(AbstractStoreManager.java:954)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getDatastoreClass(RDBMSStoreManager.java:679)

at org.datanucleus.store.rdbms.RDBMSStoreManager.getPropertiesForGenerator(RDBMSStoreManager.java:2045)

at org.datanucleus.store.AbstractStoreManager.getStrategyValue(AbstractStoreManager.java:1365)

at org.datanucleus.ExecutionContextImpl.newObjectId(ExecutionContextImpl.java:3827)

at org.datanucleus.state.JDOStateManager.setIdentity(JDOStateManager.java:2571)

at org.datanucleus.state.JDOStateManager.initialiseForPersistentNew(JDOStateManager.java:513)

at org.datanucleus.state.ObjectProviderFactoryImpl.newForPersistentNew(ObjectProviderFactoryImpl.java:232)

at org.datanucleus.ExecutionContextImpl.newObjectProviderForPersistentNew(ExecutionContextImpl.java:1414)

at org.datanucleus.ExecutionContextImpl.persistObjectInternal(ExecutionContextImpl.java:2218)

at org.datanucleus.ExecutionContextImpl.persistObjectWork(ExecutionContextImpl.java:2065)

at org.datanucleus.ExecutionContextImpl.persistObject(ExecutionContextImpl.java:1913)

at org.datanucleus.ExecutionContextThreadedImpl.persistObject(ExecutionContextThreadedImpl.java:217)

at org.datanucleus.api.jdo.JDOPersistenceManager.jdoMakePersistent(JDOPersistenceManager.java:727)

... 128 more

at com.databricks.backend.daemon.driver.SQLDriverLocal.executeSql(SQLDriverLocal.scala:130)

at com.databricks.backend.daemon.driver.SQLDriverLocal.repl(SQLDriverLocal.scala:145)

at com.databricks.backend.daemon.driver.DriverLocal.$anonfun$execute$20(DriverLocal.scala:668)

at com.databricks.unity.EmptyHandle$.runWith(UCSHandle.scala:41)

at com.databricks.backend.daemon.driver.DriverLocal.$anonfun$execute$18(DriverLocal.scala:668)

at com.databricks.logging.Log4jUsageLoggingShim$.$anonfun$withAttributionContext$1(Log4jUsageLoggingShim.scala:32)

at scala.util.DynamicVariable.withValue(DynamicVariable.scala:62)

at com.databricks.logging.AttributionContext$.withValue(AttributionContext.scala:94)

at com.databricks.logging.Log4jUsageLoggingShim$.withAttributionContext(Log4jUsageLoggingShim.scala:30)

at com.databricks.logging.UsageLogging.withAttributionContext(UsageLogging.scala:283)

at com.databricks.logging.UsageLogging.withAttributionContext$(UsageLogging.scala:282)

at com.databricks.backend.daemon.driver.DriverLocal.withAttributionContext(DriverLocal.scala:62)

at com.databricks.logging.UsageLogging.withAttributionTags(UsageLogging.scala:318)

at com.databricks.logging.UsageLogging.withAttributionTags$(UsageLogging.scala:303)

at com.databricks.backend.daemon.driver.DriverLocal.withAttributionTags(DriverLocal.scala:62)

at com.databricks.backend.daemon.driver.DriverLocal.execute(DriverLocal.scala:645)

at com.databricks.backend.daemon.driver.DriverWrapper.$anonfun$tryExecutingCommand$1(DriverWrapper.scala:622)

at scala.util.Try$.apply(Try.scala:213)

at com.databricks.backend.daemon.driver.DriverWrapper.tryExecutingCommand(DriverWrapper.scala:614)

at com.databricks.backend.daemon.driver.DriverWrapper.executeCommandAndGetError(DriverWrapper.scala:533)

at com.databricks.backend.daemon.driver.DriverWrapper.executeCommand(DriverWrapper.scala:568)

at com.databricks.backend.daemon.driver.DriverWrapper.runInnerLoop(DriverWrapper.scala:438)

at com.databricks.backend.daemon.driver.DriverWrapper.runInner(DriverWrapper.scala:381)

at com.databricks.backend.daemon.driver.DriverWrapper.run(DriverWrapper.scala:232)

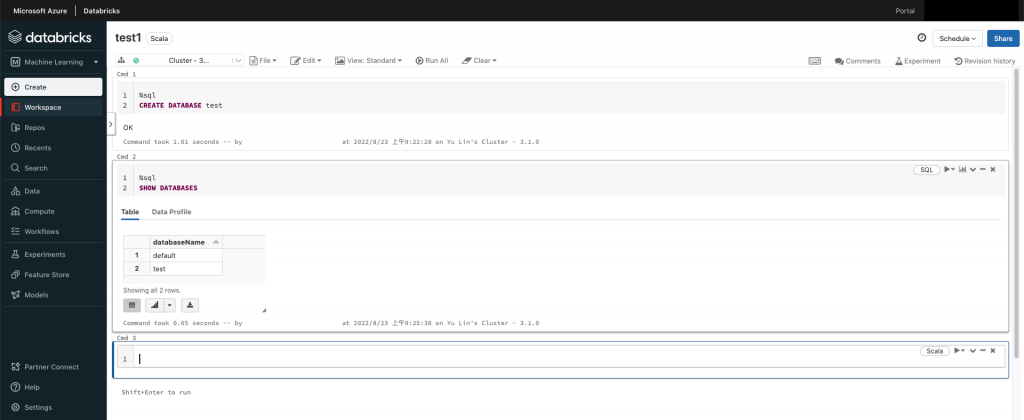

at java.lang.Thread.run(Thread.java:748)解決方法是在 Spark Conf 裡面在外加以下三個 conf 設定,之後就可以成功創建 Database 與 Table 了。

datanucleus.autoCreateSchema true

datanucleus.fixedDatastore false

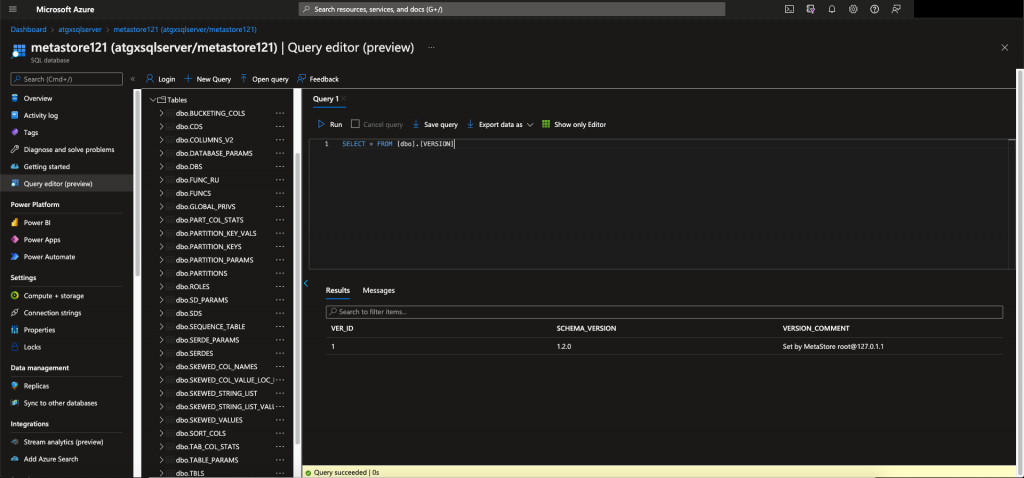

datanucleus.autoCreateTables true觀察在 SQL Database 內部的表格,發現大部分的 Tables 都有被自動建出來,另外 dbo.VERSION 這個表格內存有一個 SCHEMA VERSION = 1.2.0 的紀錄。
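The complete Spark config for this Hive 1.2.1 setup (the six settings from the start of this section plus the three DataNucleus settings above) depends only on a few values: server name, database name, user and password. The sketch below assembles it as "key value" lines for pasting into the cluster's Spark config box. build_hive121_conf is a hypothetical helper written for this illustration, not a Databricks API, and the server, database and user values are the placeholders used in this post.

```python
def build_hive121_conf(server: str, database: str, user: str, password: str) -> str:
    """Hypothetical helper: return the Hive 1.2.1 external-metastore Spark config
    as 'key value' lines (one setting per line), in the order used in this post."""
    conf = {
        "spark.sql.hive.metastore.version": "1.2.1",
        "spark.sql.hive.metastore.jars": "maven",
        "spark.hadoop.javax.jdo.option.ConnectionURL": (
            f"jdbc:sqlserver://{server}.database.windows.net:1433;database={database}"
        ),
        "spark.hadoop.javax.jdo.option.ConnectionUserName": f"{user}@{server}",
        "spark.hadoop.javax.jdo.option.ConnectionPassword": password,
        "spark.hadoop.javax.jdo.option.ConnectionDriverName": (
            "com.microsoft.sqlserver.jdbc.SQLServerDriver"
        ),
        # The three settings that let DataNucleus create the missing
        # metastore tables (including VERSION) on first use.
        "datanucleus.autoCreateSchema": "true",
        "datanucleus.fixedDatastore": "false",
        "datanucleus.autoCreateTables": "true",
    }
    # Dicts keep insertion order, so the output order matches the dict above.
    return "\n".join(f"{key} {value}" for key, value in conf.items())


if __name__ == "__main__":
    print(build_hive121_conf("sqlserver", "metastore", "user", "<password>"))
```

Generating the config from parameters keeps the JDBC URL and the user@server login consistent with each other, which is easy to get wrong when editing the lines by hand.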

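Note: When we first saw this error, we had also just read the Azure Databricks documentation that pairs Hive metastore versions with Databricks Runtime versions, so we assumed a wrong spark.sql.hive.metastore.version was the reason SessionHiveMetaStoreClient could not be instantiated. However, raising the version to 3.1.0 still did not let us create a database. How to use a newer Hive metastore version is described below.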
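The top-level message (Unable to instantiate SessionHiveMetaStoreClient) says little about what actually went wrong; in the trace above, the useful information is in the innermost "Caused by:" line, the missing VERSION table. A minimal sketch for pulling that line out of a trace copied from the notebook output; root_cause is a hypothetical helper, and it assumes each "Caused by:" entry starts on its own line, as in the traces in this post.

```python
import re
from typing import Optional

# Matches lines such as "Caused by: java.lang.RuntimeException: ..."
CAUSED_BY = re.compile(r"^\s*Caused by:\s*(.+)$")


def root_cause(trace: str) -> Optional[str]:
    """Return the text of the last (innermost) 'Caused by:' line, or None."""
    innermost = None
    for line in trace.splitlines():
        match = CAUSED_BY.match(line)
        if match:
            innermost = match.group(1).strip()
    return innermost


if __name__ == "__main__":
    # A shortened excerpt of the first trace in this post.
    sample = "\n".join([
        "Caused by: java.lang.RuntimeException: Unable to instantiate "
        "org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient",
        "at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1523)",
        "Caused by: java.lang.reflect.InvocationTargetException",
        'Caused by: org.datanucleus.store.rdbms.exceptions.MissingTableException: '
        'Required table missing : "VERSION" in Catalog "" Schema "".',
        "... 128 more",
    ])
    print(root_cause(sample))  # prints the MissingTableException line
```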

Using Azure SQL Database as the Databricks Metastore (Hive Metastore 2.0 and above) (link)

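The official Azure documentation indicates that Databricks also supports Hive Metastore 2.0 and above. As mentioned in the note above, changing spark.sql.hive.metastore.version in the Spark config to either 2.3.7 or 3.1.0 results in the following error, again failing to instantiate SessionHiveMetaStoreClient: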

AnalysisException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Caused by: HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Caused by: RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

Caused by: InvocationTargetException:

Caused by: MetaException: Version information not found in metastore.

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$withClient$2(HiveExternalCatalog.scala:160)

at org.apache.spark.sql.hive.HiveExternalCatalog.maybeSynchronized(HiveExternalCatalog.scala:112)

at org.apache.spark.sql.hive.HiveExternalCatalog.$anonfun$withClient$1(HiveExternalCatalog.scala:150)

at com.databricks.backend.daemon.driver.ProgressReporter$.withStatusCode(ProgressReporter.scala:377)

at com.databricks.backend.daemon.driver.ProgressReporter$.withStatusCode(ProgressReporter.scala:363)

at com.databricks.spark.util.SparkDatabricksProgressReporter$.withStatusCode(ProgressReporter.scala:34)

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:149)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:310)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:228)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:218)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.externalCatalog(HiveSessionStateBuilder.scala:59)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.$anonfun$hiveCatalog$1(HiveSessionStateBuilder.scala:74)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.externalCatalog$lzycompute(SessionCatalog.scala:544)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.externalCatalog(SessionCatalog.scala:544)

at org.apache.spark.sql.catalyst.catalog.SessionCatalogImpl.listDatabases(SessionCatalog.scala:771)

at com.databricks.sql.managedcatalog.ManagedCatalogSessionCatalog.listDatabasesWithCatalog(ManagedCatalogSessionCatalog.scala:601)

at com.databricks.sql.managedcatalog.UnityCatalogV2Proxy.listNamespaces(UnityCatalogV2Proxy.scala:124)

at org.apache.spark.sql.execution.datasources.v2.ShowNamespacesExec.run(ShowNamespacesExec.scala:42)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result$lzycompute(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.result(V2CommandExec.scala:43)

at org.apache.spark.sql.execution.datasources.v2.V2CommandExec.executeCollect(V2CommandExec.scala:49)

After trying many approaches, we found the answer in the article "External Apache Hive metastore, Azure Databricks, Azure SQL": remove the following two properties from the Spark config and add datanucleus.schema.autoCreateTables true. After that, databases and tables can be created successfully.

```
spark.sql.hive.metastore.jars maven
spark.sql.hive.metastore.version 1.2.1
```

Note: At this point, the dbo.VERSION table in the SQL Database for Hive Metastore 3.1.0 contains no records.
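The change above can be expressed as a small transformation of the "key value" config text: drop the two pinning properties and add datanucleus.schema.autoCreateTables true, leaving the JDBC connection settings untouched. A minimal sketch; parse_conf and to_builtin_client_conf are hypothetical helpers. Without the two properties the cluster presumably falls back to Spark's defaults (the Hive client jars built into the runtime), but which Hive client version that is depends on the Databricks Runtime and is not asserted here.

```python
from typing import Dict

# The two properties removed in this section.
REMOVED_KEYS = ("spark.sql.hive.metastore.jars", "spark.sql.hive.metastore.version")


def parse_conf(text: str) -> Dict[str, str]:
    """Parse Spark-config-box style 'key value' lines; blank lines are skipped."""
    conf: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        key, _, value = line.partition(" ")
        conf[key] = value.strip()
    return conf


def to_builtin_client_conf(conf: Dict[str, str]) -> Dict[str, str]:
    """Return a new config without the version/jars pinning, letting DataNucleus
    create missing tables. The input dict is not modified."""
    updated = {k: v for k, v in conf.items() if k not in REMOVED_KEYS}
    updated["datanucleus.schema.autoCreateTables"] = "true"
    return updated


if __name__ == "__main__":
    original = """
spark.sql.hive.metastore.version 1.2.1
spark.sql.hive.metastore.jars maven
spark.hadoop.javax.jdo.option.ConnectionURL jdbc:sqlserver://sqlserver.database.windows.net:1433;database=metastore
spark.hadoop.javax.jdo.option.ConnectionUserName user@sqlserver
"""
    for key, value in to_builtin_client_conf(parse_conf(original)).items():
        print(key, value)
```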

Using a Self-Hosted MySQL Database as the Hive Metastore (link)

We will cover this in a separate post.

Unity Catalog

So far we can only create databases and tables. If we want a three-level namespace (catalog.schema.table), we need Unity Catalog.
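With a Hive metastore, a table is addressed with two levels (database.table); Unity Catalog adds a catalog level on top (catalog.schema.table). A minimal sketch of how shorter names map into three parts, assuming a default catalog of hive_metastore (the name under which Unity Catalog-enabled workspaces expose the Hive metastore) and a default schema of default. qualify is a hypothetical helper, and it ignores backtick-quoted identifiers that contain dots.

```python
from typing import NamedTuple


class TableName(NamedTuple):
    catalog: str
    schema: str
    table: str


def qualify(name: str, default_catalog: str = "hive_metastore",
            default_schema: str = "default") -> TableName:
    """Expand a 1-, 2- or 3-part table name into catalog.schema.table."""
    parts = name.split(".")
    if len(parts) == 1:
        return TableName(default_catalog, default_schema, parts[0])
    if len(parts) == 2:  # database.table, as with the Hive metastore
        return TableName(default_catalog, parts[0], parts[1])
    if len(parts) == 3:  # catalog.schema.table, as with Unity Catalog
        return TableName(parts[0], parts[1], parts[2])
    raise ValueError(f"expected at most three name parts, got {name!r}")


if __name__ == "__main__":
    print(qualify("test.my_table"))
    print(qualify("main.sales.orders"))
```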